UBIQUITY OF CYCLICITY

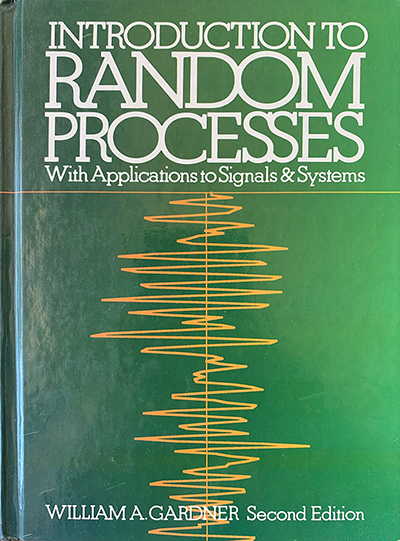

This website is motivated by observations regarding the Ubiquity of Cyclicity in time-series data arising in science and engineering. These observations date back to my (WCM) doctoral dissertation at the University of Massachusetts, Amherst, under the direction of Professor Lewis E. Franks, which reported on research I initiated in 1969, just after leaving Bell Telephone Laboratories and half a century before the construction of this website.

This Ubiquity of Cyclicity exists throughout what some refer to as God’s Creation: the World comprising all natural phenomena on our planet Earth, our Solar System, our Galaxy, and the Universe. It also exists throughout much of the machinery and processes making up mankind’s creation: technology in the form of electrical, mechanical, chemical, and other machinery and processes. Because of this Ubiquity of Cyclicity, a great many of the observations, measurements, and other time-series data that we collect, analyze, and process in science and engineering exhibit some form of cyclicity. In the simplest cases, this cyclicity is simply periodicity: the more-or-less exact repetition of data patterns. But it is far more common for the cyclicity to be statistical in nature. By this, it is meant that appropriately (WCM’s Note: this critical modifier is explained in this website) calculated time averages of the data produce periodic patterns that are often not directly observable in the raw (non-averaged) data. In some cases, the averaging may instead be performed over the members of a preferably large set of individual time series arising from some phenomenon, such as might be obtained by replicating an experiment, rather than over (appropriate) repetitions in time; but for empirical data this is most often not the case.
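To make the phrase “appropriately calculated time averages” more concrete, here is a minimal Python sketch under illustrative assumptions that are not taken from this website: a hypothetical signal consisting of Gaussian white noise whose amplitude varies with a known period of 20 samples. The raw samples look like structureless noise, and averaging them separately for each phase n mod 20 gives values near zero. Applying the same phase-synchronized average to the squared samples, however, recovers the periodic variance (1 + 0.8 cos(2πn/20))². The helper names `synchronized_average` and `modulated_noise` are hypothetical.

```python
import math
import random


def synchronized_average(x, period):
    """Average the samples of x that share the same phase n mod period."""
    sums = [0.0] * period
    counts = [0] * period
    for n, value in enumerate(x):
        sums[n % period] += value
        counts[n % period] += 1
    return [s / c for s, c in zip(sums, counts)]


def modulated_noise(num_samples, period, depth=0.8, seed=1):
    """Gaussian white noise whose amplitude varies periodically (hypothetical model)."""
    rng = random.Random(seed)
    return [
        (1.0 + depth * math.cos(2.0 * math.pi * n / period)) * rng.gauss(0.0, 1.0)
        for n in range(num_samples)
    ]


PERIOD = 20
x = modulated_noise(200_000, PERIOD)

# Phase-synchronized average of the raw data: every entry is close to 0.
mean_by_phase = synchronized_average(x, PERIOD)
# Phase-synchronized average of the squared data: a clear periodic pattern.
power_by_phase = synchronized_average([v * v for v in x], PERIOD)
# Model value of the periodic variance at each phase, for comparison.
true_power = [(1.0 + 0.8 * math.cos(2.0 * math.pi * k / PERIOD)) ** 2
              for k in range(PERIOD)]

for k in range(0, PERIOD, 5):
    print(f"phase {k:2d}: mean {mean_by_phase[k]:+.3f}  "
          f"power {power_by_phase[k]:.3f} (model {true_power[k]:.3f})")
```

The squaring step is what matters here: in this model the periodicity lives in the variance rather than the mean, so no amount of synchronized averaging of the raw data can reveal it.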

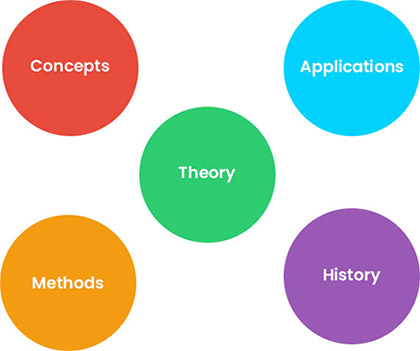

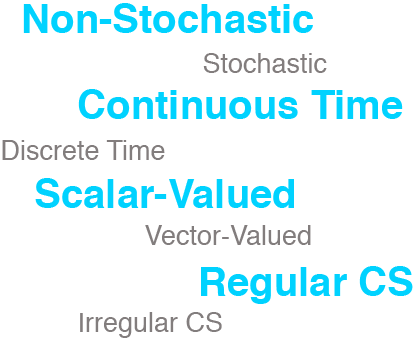

In many cases, the statistical cyclicity is found to be regular: the statistics obtained by averaging (appropriately) over a long enough time are essentially exactly periodic, and in this case the time series is said to be cyclostationary. But in many more cases the cyclicity is irregular. Roughly speaking, this means that the period of the cyclicity of the statistics of the time-series data (such as short-term empirical means, variances, correlations, etc.) changes irregularly over the long run, which makes it quite difficult to perform long-term averaging in the appropriate manner. The complication that irregular cyclicity causes in time-series analysis and processing was reduced only recently, in 2015, by the origination, in Statistically Inferred Time Warping, of theory and method for converting irregular cyclicity in time-series data to regular cyclicity. This recent breakthrough opens the door, for many fields of science and engineering, to much broader application of the otherwise now firmly established theory and method for exploiting regular cyclostationarity. Nevertheless, it does not address non-regular statistical cyclicity that is not irregular in the sense defined in this website (see Irregular Statistical Cyclicity).
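The difference between regular and irregular cyclicity, and the idea of converting one into the other, can be caricatured with another hypothetical Python sketch. It generates the same kind of amplitude-modulated noise, but with a local period that drifts between about 15.5 and 24.5 samples. Folding the squared data with a fixed 20-sample period smears out the cyclic pattern, whereas folding it against the cycle’s own phase (a warped time axis on which the cycle is regular) restores it. This is only an illustration of the idea, not the Statistically Inferred Time Warping method: here the phase is simply assumed known, whereas that method infers the warping from the data. The drift model and function names are illustrative assumptions.

```python
import math
import random


def fold(values, bin_indices, num_bins):
    """Average values separately within each bin (one bin per phase interval)."""
    sums = [0.0] * num_bins
    counts = [0] * num_bins
    for value, k in zip(values, bin_indices):
        sums[k] += value
        counts[k] += 1
    return [s / c for s, c in zip(sums, counts)]


def peak_to_peak(pattern):
    return max(pattern) - min(pattern)


rng = random.Random(2)
N = 200_000
NUM_BINS = 20  # nominal period in samples, also used as the number of phase bins

# Hypothetical drifting cycle: the local period wanders between 15.5 and 24.5 samples.
phase = 0.0    # cycle phase in [0, 1), assumed known for this illustration
phases = []
power = []     # squared data samples
for n in range(N):
    local_period = (NUM_BINS
                    + 3.0 * math.sin(2.0 * math.pi * n / 3000)
                    + 1.5 * math.sin(2.0 * math.pi * n / 1700))
    amplitude = 1.0 + 0.8 * math.cos(2.0 * math.pi * phase)
    phases.append(phase)
    power.append((amplitude * rng.gauss(0.0, 1.0)) ** 2)
    phase = (phase + 1.0 / local_period) % 1.0

# Folding with a fixed 20-sample period smears the pattern, because the cycle drifts.
fixed_fold = fold(power, [n % NUM_BINS for n in range(N)], NUM_BINS)
# Folding by the cycle's own phase (the "warped" time axis) restores a regular pattern.
warped_bins = [min(int(p * NUM_BINS), NUM_BINS - 1) for p in phases]
warped_fold = fold(power, warped_bins, NUM_BINS)

print(f"peak-to-peak, fixed period: {peak_to_peak(fixed_fold):.2f}")
print(f"peak-to-peak, warped time:  {peak_to_peak(warped_fold):.2f}")
```

On the warped axis the squared data are, in this toy model, regularly cyclic, so the synchronized averaging that works for cyclostationary data applies again.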

That being said, what exactly is meant by “exploiting cyclostationarity”? As explained in considerable detail in this website, it means using knowledge of the cyclic statistical character of otherwise erratic or randomly fluctuating time-series data to achieve higher performance in various tasks of statistical inference than could otherwise be obtained; that is, making more precise and/or more reliable inferences about the physical source of time-series data by processing those data in various ways generally referred to as “signal processing”. Such inferences may consist of detecting the presence of signals in noise, estimating parameters of such signals, filtering such signals out of noise, identifying signal types, locating the sources of propagating signals, etc. Some of the earliest such applications pursued by WCM’s research team are surveyed on Page 6.
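As one hedged illustration of detecting a signal in noise by exploiting cyclostationarity, the Python sketch below buries the same kind of periodically modulated noise (average power 1.32) in stationary Gaussian noise of power 4. It then computes a simple cyclic feature: the magnitude of the Fourier coefficient of the squared data at the assumed-known cycle frequency α = 1/20 cycles per sample. This is a simplified second-order cyclic-moment statistic chosen for illustration, not any particular detector from this website; the names and parameter values are hypothetical.

```python
import cmath
import math
import random


def cyclic_power_feature(x, alpha):
    """|(1/N) * sum_n x[n]**2 * exp(-j*2*pi*alpha*n)|, a simple cyclic second-order moment."""
    total = 0j
    for n, value in enumerate(x):
        total += value * value * cmath.exp(-2j * math.pi * alpha * n)
    return abs(total) / len(x)


rng = random.Random(3)
N = 100_000
PERIOD = 20        # assumed-known cycle period of the signal of interest
NOISE_STD = 2.0    # stationary noise with power 4, about 3x the average signal power (1.32)

signal = [(1.0 + 0.8 * math.cos(2.0 * math.pi * n / PERIOD)) * rng.gauss(0.0, 1.0)
          for n in range(N)]
noise = [rng.gauss(0.0, NOISE_STD) for _ in range(N)]

alpha = 1.0 / PERIOD
feature_noise_only = cyclic_power_feature(noise, alpha)
feature_with_signal = cyclic_power_feature([s + v for s, v in zip(signal, noise)], alpha)

print(f"noise only:     {feature_noise_only:.3f}")
print(f"signal + noise: {feature_with_signal:.3f}")
```

On average, stationary noise contributes to the squared data only at cycle frequency zero, so the feature stays small under noise alone, while with the signal present its expected value is 0.8 (half the amplitude of the 1.6 cos term in the expansion of the squared modulation). Unlike a simple power comparison, this does not require the noise level to be known.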

As an indication of how widespread the exploitation of cyclostationarity in time-series data has become since its inception 50 years ago, a Google Scholar search was performed and reported in JP65 in April 2018. This search was based on just under 50 nearly distinct application areas in science and engineering, and the search terms were chosen to yield only results involving exploitation of cyclicity in time-series data. By “nearly distinct”, it is meant that the search terms were also selected to minimize redundancy (multiple application-area searches producing the same “hits”). As shown in Table 1, the search found about 136,000 published research papers.

As another measure of the impact the cyclostationarity paradigm has had, Professor Antonio Napolitano, in Chapters 9 and 10 of his 2019 book Cyclostationary Processes and Time Series: Theory, Applications, and Generalizations, surveys the fields of application of the cyclostationarity paradigm, identifying on the order of 100 distinct application areas and citing about 500 specific published papers that address these applications. His carefully selected bibliography, primarily on cyclostationarity, includes over 1500 published papers and books.

For a historical summary of the evolution of the mathematical modeling of cycles in time-series data, from the 1700s to the early 2000s, see Page 4.1.

Table 1 Nearly Distinct Application Areas

| No. | SEARCH TERMS¹ | # of HITS |
|---|---|---|
| 1 | "aeronautics OR astronautics OR navigation" AND "CS/CS" | 3,190 |
| 2 | "astronomy OR astrophysics" AND "CS/CS" | 864 |
| 3 | "atmosphere OR weather OR meteorology OR cyclone OR hurricane OR tornado" AND "CS/CS" | 2,230 |
| 4 | "cognitive radio" AND "CS/CS" | 8,540 |
| 5 | "comets OR asteroids" AND "CS/CS" | 155 |
| 6 | "cyclic MUSIC" | 512 |
| 7 | "direction finding" AND "CS/CS" | 1,170 |
| 8 | "electroencephalography OR cardiography" AND "CS/CS" | 742 |
| 9 | "global warming" AND "CS/CS" | 369 |
| 10 | "oceanography OR ocean OR maritime OR sea" AND "CS/CS" | 3,060 |
| 11 | "physiology" AND "CS/CS" | 673 |
| 12 | "planets OR moons" AND "CS/CS" | 274 |
| 13 | "pulsars" AND "CS/CS" | 115 |
| 14 | "radar OR sonar OR lidar" AND "CS/CS" | 5,440 |
| 15 | "rheology OR hydrology" AND "CS/CS" | 639 |
| 16 | "seismology OR earthquakes OR geophysics OR geology" AND "CS/CS" | 1,090 |
| 17 | "SETI OR extraterrestrial" AND "CS/CS" | 83 |
| 18 | autoregression AND "CS/CS" | 2,040 |
| 19 | bearings AND "CS/CS" | 3,980 |
| 20 | biology AND "CS/CS" | 2,030 |
| 21 | biometrics AND "CS/CS" | 309 |
| 22 | chemistry AND "CS/CS" | 2,020 |
| 23 | classification AND "CS/CS" | 10,900 |
| 24 | climatology AND "CS/CS" | 811 |
| 25 | communications AND "CS/CS" | 21,200 |
| 26 | cosmology AND "CS/CS" | 172 |
| 27 | ecology AND "CS/CS" | 356 |
| 28 | economics AND "CS/CS" | 2,050 |
| 29 | galaxies OR stars AND "CS/CS" | 313 |
| 30 | gears AND "CS/CS" | 2,000 |
| 31 | geolocation AND "CS/CS" | 676 |
| 32 | interception AND "CS/CS" | 2,270 |
| 33 | mechanical AND "CS/CS" | 4,770 |
| 34 | medical imaging OR scanning AND "CS/CS" | 1,370 |
| 35 | medicine AND "CS/CS" | 2,990 |
| 36 | modulation AND "CS/CS" | 17,000 |
| 37 | physics AND "CS/CS" | 4,539 |
| 38 | plasma AND "CS/CS" | 542 |
| 39 | quasars AND "CS/CS" | 47 |
| 40 | Sun AND "CS/CS" | 4,320 |
| 41 | UAVs AND "CS/CS" | 238 |
| 42 | universe AND "CS/CS" | 209 |
| 43 | vibration OR rotating machines AND "CS/CS" | 3,240 |
| 44 | walking AND "CS/CS" | 990 |
| 45 | wireless AND "CS/CS" | 15,100 |
| TOTAL | | 135,628 |

¹ “CS/CS” is an abbreviation for “cyclostationary OR cyclostationarity”
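The TOTAL row of Table 1, and the figure of about 136,000 papers quoted in the text, can be checked with a few lines of Python. The list below simply transcribes the hit counts in row order, reading row 16 as 1,090.

```python
# Hit counts from Table 1, in row order (rows 1-45).
hits = [
    3190, 864, 2230, 8540, 155, 512, 1170, 742, 369, 3060,
    673, 274, 115, 5440, 639, 1090, 83, 2040, 3980, 2030,
    309, 2020, 10900, 811, 21200, 172, 356, 2050, 313, 2000,
    676, 2270, 4770, 1370, 2990, 17000, 4539, 542, 47, 4320,
    238, 209, 3240, 990, 15100,
]

total = sum(hits)
# Round to the nearest thousand, as in "about 136,000".
print(len(hits), total, round(total, -3))  # → 45 135628 136000
```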