Science is a human endeavor, and it rests on a critically important methodological foundation called the Scientific Method. The objective of this page is to present the case for the startling argument that Human Nature is fundamentally at odds with the Scientific Method, a procedural concept created by humans! The detrimental influence of human nature on scientific progress is perhaps the greatest dilemma we face in this 21st century. Mankind’s technological progress may be creating threats to our own continued existence (in the case of climate change, this is considered unsettled science according to Steven E. Koonin, as of 2021), and a major key to confronting these potential threats is accelerated scientific progress. Yet, it is argued by some that we’re moving into the 2nd century of a dry spell in scientific breakthroughs.

So, what is it about Human Nature that gets in the way of a higher fidelity implementation of the Scientific Method? And is this Human Nature truly the inescapable nature of humans or is it just a default nature that can, in fact, be modified for the better through appropriate education?

It is argued on this Page 7 that the human brain, as it develops for the great majority of people, is a significant source of our disappointment, at least in part, with progress in science in recent times. The limits on the effectiveness of the human brain in making scientific progress seem to admit a convincing explanation in terms of an imbalance between left- and right-brain functionality. These terms are defined and discussed at length herein on page 7.6. This explanation also seems to reveal why the role that mathematics has played in science in recent times has too often been a deleterious one: mathematics that is beneficial to science cannot be done without a strong balance between left and right brain activity. Perhaps a realization of the validity of this explanation will eventually lead to improvements in education that are based on a recognition of the necessity of teaching that develops the functionality of both the left brain and the right brain (if indeed there is a physical dichotomy that is the source of the commonly accepted dichotomy in brain function; otherwise, we can still speak of left brain activity and right brain activity, which refer to types of brain function, not spatial location in the brain).

Yet, it is not unreasonable to ask if right brain activity development can be taught through formal education. Surely it presents challenges beyond those of training the left brain and this might well be a major contributor to the prevalence of training as opposed to education. It would appear that it takes a balanced brain to teach others’ brains to be balanced. Perhaps education is a conundrum. Without enough balanced brains teaching education, where will the next generation’s teachers with balanced brains come from? Are we stuck in a place where there’s not enough education to develop enough educators? If so, how long has this been going on? Is it possible that balanced brains develop in only a tiny minority of the population, and they develop largely independently of formal education? Is there a statistically stable percentage of balanced brains in the population that cannot be increased through formal education? Can formal education even enhance existing balanced brains, or is enhancement a consequence of only the balanced brain teaching itself?

These questions are taking us into brain science and the philosophy of education, areas in which I am not qualified to opine beyond what I have hypothesized above, and maybe I have already gone too far there.

The thoughts expressed below on this page 7 dig into both the left-brain/right-brain explanation of ineffective thinking and other aspects of human nature that appear to be prime suspects for the cause of the lack of major breakthroughs in scientific progress.

The reader must be wondering at this point what this topic has to do with the subject of this website. This is more than hinted at in various places throughout the website, especially Page 3 where the resistance to Fraction-of-Time Probability Theory is dissected, found to be without merit, and dispensed with. This also is hinted at on the Home Page and elsewhere herein with regard to the resistance that the theory of Cyclostationarity originally met with. Having spent a good part of my career pushing back against such ill-conceived resistance, I have been motivated to seek explanations for what I found to be rampant cynicism in science in general. Skepticism is a healthy component of good science, but cynicism is often based on ignorance and is consequently counterproductive. Yet it pervades all science and might well be the key to the most substantive answer to the question “why is the pace of scientific progress so slow?” I submit that innovation, an obviously crucial component of scientific progress, is largely smothered by cynicism. I am not the first to believe this to be true. As demonstrated throughout this Page 7, many great thinkers throughout history have believed the same thing. This proposed fact has been recognized since the birth of science, but this recognition seems not to have resulted in enough being done to counter the impediment it poses to scientific progress. It is my hope that the discussion here on this Page 7 will contribute to wider recognition of the need for action throughout the scientific community.

The following selection of quotations was compiled by the WCM for the Inaugural Symposium of the Institute for Venture Science, 25 September 2015.

David Hume, 1711 – 1776

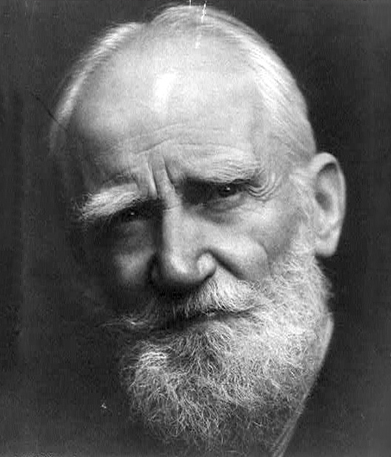

George Bernard Shaw, 1856 – 1950

Arthur Schopenhauer, 1788 – 1860

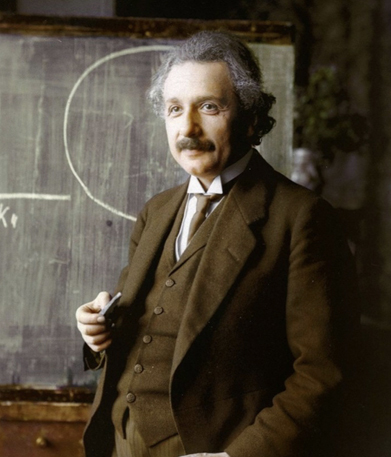

Albert Einstein, 1879 – 1955

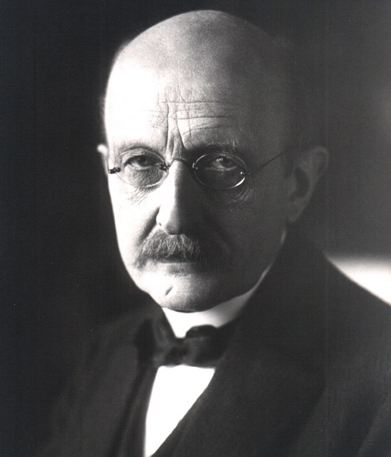

Max Planck, 1858 – 1947

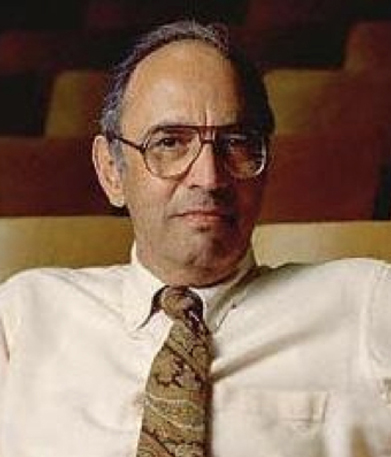

Thomas Samuel Kuhn, 1922 – 1996

Wilfred Batten Lewis Trotter, 1872 – 1939

Paul Bede Johnson, 1928 –

Ernest Rutherford, 1871 – 1937

Carl Sagan, 1934 – 1996

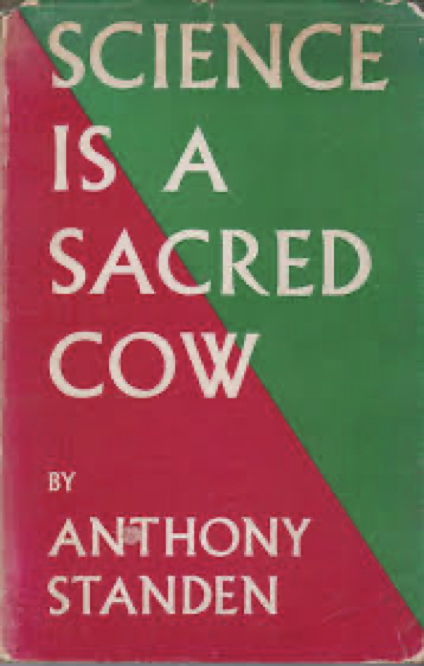

Anthony Standen, 1906 – 1993

Thomas Henry Huxley, 1825 – 1895

Letter (23 Nov 1859) to Charles Darwin a few days after the publication of Origin of Species

Simon Mitton, 1946 –

In Review of The Milky Way by Stanley L. Jaki, New Scientist, 5 July 1973

Frank J. Sulloway, historian and sociologist of science, wrote the book Born to Rebel in which he covers most major scientific changes and looks at which scientists backed the change and which did not. He tries to figure out what factors are most predictive of resisting or embracing change. He includes ideas that turned out to be validated and those that were debunked. Almost every major revolutionary breakthrough had some thinkers who rejected it as “crackpot” at first. His list includes:

Copernican revolution

Hutton’s theory of the earth (modern geology, deep time, gradual)

Evolution before and after Darwin

Bacon and Descartes—scientific method

Harvey and blood circulation

Newtonian celestial mechanics

Lavoisier’s chemical revolution

Glaciation theory

Lyell and geological uniformitarianism

Planck’s Quantum hypothesis

Einstein and general relativity

Special relativity

Continental drift

Indeterminacy in physics

Refutation of spontaneous generation

Germ theory

Lister and antisepsis

Semmelweis and puerperal fever

Epigenesis theory

Devonian controversy

The following was written by Founders Fund. [View Link1], [View Link2]

From Galileo to Jesus Christ, heretical thinkers have been met with hostility, even death, and vindicated by posterity. That ideological outcasts have shaped the world is an observation so often made it would be bereft of interest were the actions of our society not so entirely at odds with the wisdom of the point: troublemakers are essential to mankind’s progress, and so we must protect them. But while our culture is fascinated by the righteousness of our historical heretics, it is obsessed with the destruction of the heretics among us today. It is certainly true the great majority of heretical thinkers are wrong. But how does one tell the difference between “dangerous” dissent, and the dissent that brought us flight, the theory of evolution, Non-Euclidean geometry? It could be argued there are no ‘real’ heretics left. Perhaps we’ve arrived at the end of knowledge, and dissent today is nothing more than mischief or malice in need of punishment. But be the nature of our witches unclear, it cannot be denied we’re burning them. The question is only are our heretics the first in history who deserve to be burned?

We don’t think so.

We believe dissent is essential to the progressive march of human civilization. We believe there’s more in science, technology, and business to discover, that it must be discovered, and that in order to make such discovery we must learn to engage with new — if even sometimes frightening — ideas.

Every great thinker, every great scientist, every great founder of every great company in history has been, in some dimension, a heretic. Heretics have discovered new knowledge. Heretics have seen possibility before us, and portentous signs of danger. But our heretics are also themselves in persecution, a sign of danger. The potential of the human race is predicated on our ability to learn new things, and to grow. As such, growth is impossible without dissent. A world without heretics is a world in decline, and in a declining civilization everything we value, from science and technology to prosperity and freedom, is in jeopardy. [View Document]

People of science were repressed and persecuted by medieval prejudices for over 1500 years—more than 75 generations of mankind, with the exception of a brief reprieve during the Renaissance. In the two centuries since this suppression was largely overcome, science has had an immense positive impact on humanity.

Yet throughout this period, great scientists have consistently decried the penalty science is paying for not being practiced according to the Scientific Method, the essential operating principles of science. The low level of fidelity with which the Scientific Method is said to be followed today by both scientists and systems for administering science is most likely culpable for the quantifiable decline in the number and magnitude of scientific breakthroughs and revolutions in scientific thought over the last century—a decline unanimously confirmed by the National Science Board in 2006. Where are the solutions to today’s unprecedented threats to human existence: dwindling energy resources; diminishing supplies of potable water; increasing incidence of chronic disease—where are today’s counterparts to yesterday’s discovery of bacterial disease which led to antibiotics and of electricity which led to instantaneous worldwide communication and of other major breakthroughs?

The source of this unsolved problem has been recognized by many of science’s greatest achievers throughout history to be human nature: the ingrained often-subconscious behavioral motivations that sociologists tell us are responsible for our species’ very existence. The Scientific Method consists of systematic observation, measurement, and experiment, and the formulation, testing, and modification of hypotheses—all with the utmost objectivity. To implement this method with fidelity, scientists must be honest, impersonal, neutral, unprejudiced, incorruptible, resistant to peer pressure and open to the risks associated with probing the unknown. But to be all this consistently is to be inhuman. Thus, the Scientific Method is an unattainable ideal to strive for, not a recipe to simply follow—that scientists are true to the Scientific Method is argued to be a myth in Henry H. Bauer’s 1992 book The Myth of the Scientific Method.

Max Planck, the originator of the quantum theory of physics, said a hundred years ago, “A new scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die, and a new generation grows up that is familiar with it.” Thomas Kuhn, author of The Structure of Scientific Revolutions, written a half century ago, put forth the idea that to understand what holds paradigm shifts back, we must put more emphasis on the individual humans involved as scientists, rather than abstracting science into a purely logical or philosophical venture.

Considering that this problem source is inherent in people, it should not be surprising that we have not yet solved this problem: its source cannot be removed. Regarding his Theory of the Human Condition, the biologist Jeremy Griffith said in the 1980s, “The human condition is the most important frontier of the natural sciences.” But, in reaction, it has been predicted that no idea will be more fiercely resisted than the explanation of the human condition because the arrival of understanding of the human condition is an extremely exposing and confronting development.

Nevertheless, civilizations have indeed made progress toward correcting for undesirable effects of human nature on society, especially through social standards for upbringing, social mores, legal systems, and judicial processes. So, why have our systems for administering science, where integrity is so important, not been more successful?

Armed with insights provided by the science of Science, direct experience from careers of conducting and administering science, and humanitarian compassion, the founders of The Institute for Venture Science (IVS) formulated a thesis (www.theinstituteforventurescience.net/, https://ivscience.org/). This thesis goes to the heart of the problem—the specific reasons our present inherited systems for administering science are failing to support venture science—and it proposes specific solutions that reflect solutions that are working today in other human endeavors, such as incubators for venture capitalism. It faces head-on those aspects of human nature and also those systems of science administration that are evidently at odds with venture science—the key to major advances in our understanding of Nature. [View Document]

The IVS Operative Principles, paraphrased from the IVS website, are:

A prime example of what traditional administration and practice of science has not yet been able to deliver is an understanding of the physical origin of inertia, mass, and gravitation: This remains an outstanding puzzle. And the same is true for electric and magnetic fields: We can measure them, predict their behavior, and utilize them; but we still do not understand their origins.

Footnotes:

1. Note from Author, William A. Gardner: This essay is dedicated to my mother, the late Frances Anne Demma, born 100 years ago today, 20 September 2015.

2. This essay was distributed at The IVS Symposium in Seattle, 25 September 2015.

3. Research strongly suggests that the speed with which certain causes produce effects at a distance is not necessarily limited by the speed of light, but could actually be orders of magnitude faster—known colloquially as “warp speed” and fantasized by the writers of the TV series Star Trek, in which the Starship Enterprise featured a fictitious “warp drive”.

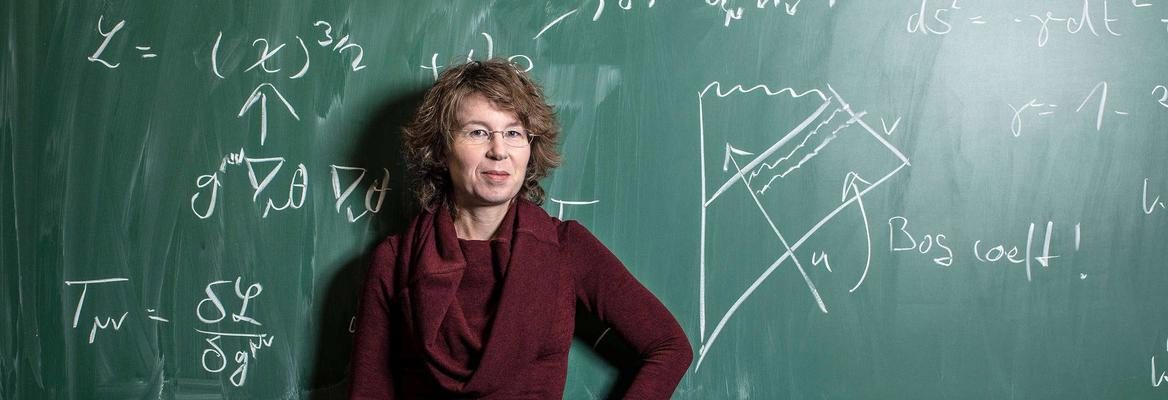

WCM’s note: Actually, the point of Page 7 is that this last century’s bogus achievement in physics prior to the last 40 years—the Standard Model—reflects the same disrespect for the Scientific Method that this author recognizes when looking at only the last 40 years. It’s much worse than she recognizes in this article: https://iai.tv/articles/why-physics-has-made-no-progress-in-50-years-auid-1292

IAI News – Changing how the world thinks. An online magazine of big ideas produced by The Institute of Arts and Ideas. Issue 84, 8 January 2020

Why the foundations of physics have not progressed for 40 years, by Sabine Hossenfelder, research fellow at the Frankfurt Institute for Advanced Studies and author of the blog Backreaction

Physicists face stagnation if they continue to treat the philosophy of science as a joke

In the foundations of physics, we have not seen progress since the mid-1970s when the standard model of particle physics was completed. Ever since then, the theories we use to describe observations have remained unchanged. . . .

Excerpts from Hossenfelder’s Article:

The consequence has been that experiments in the foundations of physics past the 1970s have only confirmed the already existing theories. None found evidence of anything beyond what we already know.

But theoretical physicists did not learn the lesson and still ignore the philosophy and sociology of science. I encounter this dismissive behavior personally pretty much every time I try to explain to a cosmologist or particle physicists that we need smarter ways to share information and make decisions in large, like-minded communities. If they react at all, they are insulted if I point out that social reinforcement – aka group-think – befalls us all, unless we actively take measures to prevent it.

Instead of examining the way that they propose hypotheses and revising their methods, theoretical physicists have developed a habit of putting forward entirely baseless speculations. Over and over again I have heard them justifying their mindless production of mathematical fiction as “healthy speculation” – entirely ignoring that this type of speculation has demonstrably not worked for decades and continues to not work. There is nothing healthy about this. It’s sick science. And, embarrassingly enough, that’s plain to see for everyone who does not work in the field.

And so, what we have here in the foundation of physics is a plain failure of the scientific method. All these wrong predictions should have taught physicists that just because they can write down equations for something does not mean this math is a scientifically promising hypothesis. String theory, supersymmetry, multiverses. There’s math for it, alright. Pretty math, even. But that doesn’t mean this math describes reality.

Why don’t physicists have a hard look at their history and learn from their failure? Because the existing scientific system does not encourage learning. Physicists today can happily make career by writing papers about things no one has ever observed, and never will observe. This continues to go on because there is nothing and no one that can stop it.

A contrarian argues that modern physicists’ obsession with beauty has given us wonderful math but bad science. Hossenfelder also has written a book based on the above stated theme, which can be found here: https://www.youtube.com/watch?v=Q1KFTPqc0nQ

Whether pondering black holes or predicting discoveries at CERN, physicists believe the best theories are beautiful, natural, and elegant, and this standard separates popular theories from disposable ones. This is why, Sabine Hossenfelder argues, we have not seen a major breakthrough in the foundations of physics for more than four decades. The belief in beauty has become so dogmatic that it now conflicts with scientific objectivity: observation has been unable to confirm mindboggling theories, like supersymmetry or grand unification, invented by physicists based on aesthetic criteria. Worse, these “too good to not be true” theories are actually untestable and they have left the field in a cul-de-sac. To escape, physicists must rethink their methods. Only by embracing reality as it is can science discover the truth.

Iain McGilchrist’s book, The Master and His Emissary: The Divided Brain and the Western World, appeared in 2010. It offers deep insight into failures in education and science in terms of a model of the functioning of the human brain based on distinctions between the characteristics of the left brain and those of the right brain, and the nature of the interaction between the two. After some preliminary remarks below from the WCM regarding some of his personal experiences throughout his time in academia and contract research activities for the government that he thinks are explainable in terms of McGilchrist’s theory, references to treatment and discussion of this theory and excerpts from a review of McGilchrist’s book are provided. Directly below is an illustrative list of characteristics of the left and right sides of the human brain:

| Left Brain | Right Brain |
|---|---|
| fixed or controlled | free flowing, uncontrolled |
| expectation guided | follows music, time |
| sees only pieces | sees the whole |
| disconnected | connected |
| certain, explicit meaning | uncertain, implicit meaning |
| pin-point vision | fish-eye |
| slave or emissary | master |
| high-resolution local vision | broad global vision |
| analytical | creative |
| quick and dirty or black and white | devil’s advocate |
| utilitarian | humanitarian |
| isolated, like autism | empathetic |
| living within a model; the model is reality | distinguishing between model and reality |

I (WCM) was participating in the world of academia while on the faculty at the University of California, Davis, between 1972 and 2000, following eight years of study at Stanford University, Massachusetts Institute of Technology, and the University of Massachusetts, and a couple of years at Bell Telephone Laboratories between my MS and PhD studies. It was my perception during these nearly four decades that there are people with similar formal training and fields of study who react extremely differently to proposals for changes in ways of thinking about problem solving. These differences appear to me to coincide with the differences between what McGilchrist labels left-brain thinking and right-brain thinking.

It also occurred to me that the transformation of universities described in the book review below as “sad and misguided”, which had begun during my tenure in academia, might be related to a growing dominance of what today I am inclined to label unmoderated left-brain thinking.

My research over the last half century into the conceptualization and development of fundamentals of statistical interpretation and analysis of data, especially data that is corrupted during conveyance by electromagnetic waves from one location to another, has often met with opposition or simply disinterest. But there are significant exceptions to this: my contributions to the subject of cyclostationarity have had a visible impact on progress in statistical time-series analysis in many fields of science and engineering (as discussed throughout this website), following a long (for me personally) period of disinterest. Yet, my closely linked contributions to the subject of Fraction-of-Time Probabilistic modeling of time series data (also discussed thoroughly in this website) have had little impact to date, since its inception 35 years ago. Today, I believe I can attribute this lack of response or defensive behavior to the influence of people with unmoderated left brains. (As a side note, I expect the recent work posted on Pages 3.2 and 3.3 to have an impact that might well positively influence adoption of the unconventional idea of Fraction-of-Time Probability.)

With the thought that real stories capture readers’ interest, I considered including here some examples of what I interpreted to be signs of balanced and imbalanced brains, as McGilchrist puts it, from my past experiences with individuals. I had hoped I could at least make the point that the basis for my acceptance of McGilchrist’s model is experiential, not formal research. Nevertheless, I eventually decided that delving—even briefly—into some of the details of my experience was inappropriate for the primary educational objectives of this website. So, I chose to simply let the published debate involving two individuals, Hinich and Gerr, posted on Page 3.6 serve to illustrate what I consider to be transparent examples of weak right-brain thinking, and I have included below a few words about two other individuals—one of whom I consider to show strong signs of a balanced brain, and the other of whom gave me mixed signals. In addition, I provide here on page 7.8 brief comment on two recent and important scientific debates that may serve to further illustrate the conflict between right-brain thinking and left-brain thinking.

In 2013, on a lark after leaving the position of “Research Scientist Senior Principal” at Lockheed Martin’s Advanced Technology Center in Palo Alto, I attended an Electric Universe conference and met one of the broadest thinkers I have come to know—who also was attending on a lark. This was the late Dr. James T. Ryder (Jim), retired V.P. and head of Lockheed Martin’s Advanced Technology Center in Palo Alto, California, who quickly became my primary correspondent and confidant. Jim was the quintessential example of a healthy mind with what appeared to me to be excellent interaction between active left and right brains. Coincidentally, I found out during a lunch-time chat at the conference with Jim that it was he who in 2010, prior to his retirement, initiated Lockheed Martin’s purchase of my company’s intellectual property in 2011. For the next six years following the 2013 Electric Universe conference, I worked on better understanding electromagnetic phenomena, particularly in connection with mathematically modeling static field structures of hypothetical cosmic current, and I enjoyed regular correspondence and frequent retreats with Jim and his wife Janet at my wife Nancy’s and my home—ten acres of Redwood-forested wilderness surrounded by hundreds of acres of the same with 1930’s-vintage mini-lodge and guest cabin with knotty pine interiors and stone fireplaces, and Redwood-shingled exteriors in the Mayacamas Mountain Range west of the Napa Valley—affectionately called the Vista Norte Sanctuary for Cogitation. 
During that period, Jim was a principal of the Institute for Venture Science and founded the International Science Foundation specifically to fund 1) an experimental plasma research project—the first of its kind—which initially aimed at testing the hypothesis that the Sun is an electrical phenomenon that can possibly be replicated in a laboratory vacuum chamber and ultimately evolved into a project on energy production technology, and 2) other potentially-breakthrough science projects. This project produced astounding results that hold promise for changing the course of science and technology in the fields affected, including clean production of energy, clean heating, production of rare earth elements by transmutation, and remediation of nuclear waste (see www.safireproject.com).

In the spring of 2018, Jim passed away suddenly and unexpectedly, and the International Science Foundation was closed.

Among many more significant consequences for those for whom Jim had been a part of their lives, this sad event not only took from me a best friend, but also left me without what had been an interesting and undeniably qualified source of ideas and an interested sounding board for my ideas in the areas of space technology, astrophysics, and cosmology, among a variety of other topics (see Page 11.3). I had shared with Jim early drafts of what became the publication [JP65], wherein I reported my admittedly late discovery (made in 2015) of how to expand the cyclostationarity paradigm in engineering, which I created in my earlier life, to accommodate empirical time-series data with irregular cyclicity gathered throughout the sciences, where natural systems predominate, in contrast to the predominance of manmade systems in engineering, which often give rise to regular cyclicity. Following Jim’s passing and this exciting discovery, I changed the balance in my work to favor reporting what I had already learned over decades past about time-series analysis instead of pushing ahead (?) with the much earlier-stage research into electric universe concepts in astrophysics which I had more recently been engaged in.

As my fourth example, I mention the accomplished and highly visible Robert W. Lucky of Bell Telephone Laboratories, as it was called when I worked there before the famous (or infamous, depending on your perspective) divestiture of AT&T. Lucky is someone deserving of much respect for the broad scope of his thinking and writing, among many other achievements and professional contributions. At the same time, he was among a number of reviewers between 1972 and 1987 who recommended against funding my research on cyclostationarity. In a reviewer’s report on one of my cyclostationarity research proposals submitted to the National Science Foundation, he wrote “it’s not even wrong”. His point was, evidently, that he could find no technical errors but could not see why the development of the proposed theory might be of any import. Of course, it is possible that my proposal was poorly written, but essentially all my research proposals on cyclostationarity for my first 15 years on the faculty at UC Davis went unfunded until the publication of my book [Bk2]. Thereafter, I fairly quickly became what I was told by a UC Davis administrator at the time was the best funded theoretician at UC Davis (see Page 10). It seems unlikely that the writing of a book (my second) suddenly made me so much of a better proposal writer that my proposal success rate went from nearly 0% for 15 years to nearly 100% for the next 15 years at UC Davis, followed by another 10 years of similar success at my engineering R&D company, SSPI (see Page 12). Rather, I think it is that the book finally got the statistical signal processing community (especially in communications and signals intelligence systems) interested in cyclostationarity by making such a strong and highly visible case for its utility that to deny this utility risked one’s reputation. Instead of my research proposals being viewed as outside of the mainstream, they were thereafter seen as defining a new branch of the mainstream. 
Although no conclusions can be drawn from this example, it is suggestive of the possibility that an individual’s mode of thinking (right-brain vs left-brain) may differ from one topic to another. For example, some practically oriented engineers I have met appear to actually be biased against theoretical work until it has been proven to be essential knowledge, which can take decades.

This is precisely the classic problem of Left-Brain thinking not being guided by Right-Brain thinking. It took a 566-page book to make the point that the development of cyclostationarity theory is a good idea. Of course, this sledge-hammer-to-the-head approach to getting the attention of those with tendencies toward left-brain thinking could never be taken in a research proposal. Therein lies the problem addressed on this Page 7: Breakthrough research is commonly rejected by left-brain thinkers who, all too often, wield authority they are not properly qualified to exercise.

Having struggled with many reviewers of my work who I felt were exhibiting what I imagine McGilchrist might call lopsided brains (light on the right side), and also having had the rewarding experience of actually collaborating with an exemplary individual exhibiting what I considered to be strong signs of a healthy right brain that productively interacts with a capable left brain, I consider myself to have some small amount of license to react to the book review below and to Iain McGilchrist’s brain theory, which I believe to be a reasonable basis for gaining valuable insight into what many consider to be a serious lack of progress in science, the pursuit of understanding of the natural world. Therefore, as WCM, I am including in this website the following links and I am encouraging those interested in fostering a scientific revolution to read the book review in Cosmos and History: The Journal of Natural and Social Philosophy, vol. 8, no. 1, 2012 https://cosmosandhistory.org/index.php/journal/article/view/290/1271 (copied in part below), view the videos youtube.com/watch?v=dFs9WO2B8uI and youtube.com/watch?v=U2mSl7ee8DI and possibly others, and read some of the writings of Iain McGilchrist and the many serious reactions to them (see wikipedia.org/wiki/The_Master_and_His_Emissary). I believe his model can be very useful to those wanting to see badly needed fundamental change in the practice and results of science. Finally, I recommend the especially readable and relatively recent (2023) review of McGilchrist’s latest 3-volume series, entitled The Matter With Things: Our Brains, Our Delusions, and the Unmaking of the World, which is accessible here.

Excerpts from review article THE MASTER AND HIS EMISSARY:

THE DIVIDED BRAIN AND THE MAKING OF THE WESTERN WORLD

By Arran Gare

Philosophy and cultural Inquiry

Swinburne University

agare@swin.edu.au

Iain McGilchrist, The Master and His Emissary: The Divided Brain and the Making of the Western World, New Haven and London: Yale University Press, 2010, ix + 534 pp. ISBN: 978-0-300-16892-1 pb, £11.99, $25.00.

EXCERPTS —

It is now more than a century since Friedrich Nietzsche observed that ‘nihilism, this weirdest of all guests, stands before the door.’ Nietzsche was articulating what others were dimly aware of but were refusing to face up to, that, as he put it, ‘the highest values devaluate themselves. The aim is lacking; “why” finds no answer.’ Essentially, life was felt to have no objective meaning. It is but ‘a tale, told by an idiot, full of sound and fury, Signifying nothing’. Nietzsche also saw the threat this view of life posed to the future of civilization. Much of the greatest work in philosophy since Nietzsche has been in response to the crisis of culture that Nietzsche diagnosed. Although the word ‘nihilism’ was seldom used, the struggle to understand and overcome nihilism was central to most of the major schools of twentieth century philosophy: neo-Kantianism and neo-Hegelianism, pragmatism, process philosophy, hermeneutics, phenomenology, existentialism, systems theory in its original form, the Frankfort School of critical philosophy and post-positivist philosophy of science, among others. William James, John Dewey, Henri Bergson and Alfred North Whitehead, Edmund Husserl, Max Scheler, Martin Heidegger, Maurice Merleau-Ponty and Ludwig Wittgenstein are just some of the philosophers who grappled with this most fundamental of all problems. Nietzsche, along with these philosophers, influenced mathematicians, physicists, chemists, biologists, sociologists and psychologists and inspired artists, architects, poets, novelists, musicians and film-makers, generating a much broader movement to overcome nihilism. Iain McGilchrist’s book builds on this anti-nihilist tradition, a tradition which is facing an increasingly hostile environment within universities and is increasingly marginalized. Although he does not characterize it in this way, The Master and his Emissary can thus be read as a major effort to comprehend and overcome the nihilism of the Western world.

–Pages 413 to 445 omitted–

EDUCATION AGAINST NIHILISM: REVIVING THE RIGHT HEMISPHERE

This is a schematic account of a recurring pattern that can be found in organizations of all types and at all scales, from civilizations, nations, churches, business organizations and political parties (see for instance Robert Michels on the Iron Law of Oligarchy), and accounts for the recurring failure of political and social reformers. Those who have recognized this problem have tended to take more indirect routes to overcoming the ills of society. Very often, they have focused on education, hoping in this way to foster the development of better people, protect institutions and foster a healthier society. In this, they have often been successful, although their achievements in this regard are not properly acknowledged in a culture in which left-hemisphere values dominate. If we are to understand and overcome the advanced nihilism of postmodern culture, then, we need to look at the implications of McGilchrist’s work for understanding education generally and the present state of education, and what can be done about it.

Institutions of education, the institutions through which culture has been developed and passed on from generation to generation, have been central to the rise and fall of societies and civilizations. Generally, although not always, they have fostered the development of the modes of experience associated with the right hemisphere, countering the tendency for brains to malfunction. Paideia, a public system of education, was central to Greek civilization, exemplified this, and as Werner Jaeger showed in Paideia: the Ideals of Greek Culture and Early Christianity and Greek Paideia, had an enormous influence on later civilizations. Inspired by the Greeks (although not reaching their heights), the Romans developed the system of the artes liberalis (Liberal Arts), a term coined by Cicero to characterize the education suitable for free people, as opposed to the specialized education suitable for slaves. While this education degenerated in Rome, the artes liberalis became the foundation for education in the medieval universities. In the Renaissance, in reaction to the increasing preoccupation with abstractions of medieval scholastics, a new form of education was developed by Petrarch to uphold what Cicero called humanitas – humanity, reviving again a right-hemisphere world. This was the origin of the humanities. The University of Berlin established in 1810 under the influence of Romantic philosophy, placed the Arts Faculty, which included the humanities, the sciences and mathematics, with philosophy being required to integrate all these, at its centre. It was assumed that with the development of Naturphilosphie, science and mathematics would be reconciled with the humanities. Wilhelm von Humboldt, manifesting the values and sensitivities of a healthily functioning brain, characterized the function of higher institutions as ‘places where learning in the deepest and widest sense of the word may be cultivated’. 
Rejecting the idea that universities should be utilitarian organizations run as instruments of governments, he wrote that if they are to deliver what governments want,

… the inward organization of these institutions must produce and maintain an uninterrupted cooperative spirit, one which again and again inspires its members, but inspires without forcing them and without specific intent to inspire. … It is a further characteristic of higher institutions of learning that they treat all knowledge as a not yet wholly solved problem and are therefore never done with investigation and research. This … totally changes the relationship between teacher and student from what it was when the student still attended school. In the higher institutions, the teacher no longer exists for the sake of the student; both exist for the sake of learning. Therefore, the teacher’s occupation depends on the presence of his students. … The government, when it establishes such an institution must: 1) Maintain the activities of learning in their most lively and vigorous form and 2) Not permit them to deteriorate, but maintain the separation of the higher institutions from the schools … particularly from the various practical ones.

The Humboldtian form of the university, because of its success, became the reference point for judging what universities should be until the third quarter of the Twentieth Century and the values they upheld permeated not only education, but the whole of society. Despite the sciences embracing scientific materialism and hiving off from Arts faculties, this model of the university continued the tradition of supporting the values of the right hemisphere, including giving a place to curiosity driven research. It was protected from careerists by the relatively low pay of its staff and the hard work required to gain appointments and to participate in teaching and research.

The civilizing role of universities has now been reversed. People are simultaneously losing the ability to empathize, a right hemisphere ability, and to think abstractly, a left hemisphere ability. Society is being de-civilized, with people losing the ability to stand back from their immediate situations. What happened? The Humboldtian model of the university has been abandoned, arts faculties have been downsized or even abolished, science has been reduced to techno-science, and the ideal of education fostering people with higher values has been eliminated with education reconceived as mere investments to increase earning power. The whole nature of academia has changed. As Carl Boggs noted, ‘the traditional intellectual … has been replaced by the technocratic intellectual whose work is organically connected to the knowledge industry, to the economy, state, and military.’ Consequently, curiosity among students has almost disappeared (‘wonder’ disappeared a long time ago), with the amount of time students spend studying having fallen from forty hours per week in the 1960s to twenty-five hours per week today, with an almost complete elimination of self-directed study. Without the inspiration that comes from the right hemisphere, the left hemisphere fails to develop.

Through McGilchrist’s work, we can now better understand this transformation.

Universities were effectively taken over by people with malfunctioning brains. As universities became increasingly important for the functioning of the economy, an increasing number of academics were appointed with purely utilitarian interests. This provided an environment in which people with left hemisphere dominated brains could flourish and then dominate universities. Techno-scientists largely eliminated fundamental research inspired by the quest to understand the world, along with scientists inspired by this quest, thereby almost crippling efforts to develop a post-mechanistic science. It was not only engineering and the sciences that were affected, however. As universities expanded, arts faculties also were colonized by people with malfunctioning brains who then fragmented inquiry and inverted the values of their disciplines. Rejecting the anti-nihilist tradition that McGilchrist has embraced, most philosophy departments in Anglophone countries, and following them in continental Europe, were taken over by people who transformed philosophy into academic parlour games. Literature departments were taken over by people who debunked the very idea of literature. The humanities generally came to be dominated by postmodernists who rejected the quest to inspire people with higher values (as described by Scheler) as elitist. They called for permanent revolution – of high-tech commodities, thereby serving the transnational high-tech corporations who produce these commodities. Then, at a time when the globalization of the economy began to undermine democracy and the global ecological crisis began to threaten the conditions for humanity’s continued existence, careerist managers, with the support of politicians and backed by business corporations, took control of universities, transforming them from public institutions into transnational corporations, imposing their left hemisphere values in the process.

The consequences of this inversion of values were entirely predictable. Academic staff have been redefined as human resources, all aspects of academic life are now monitored, measured and quantified by managers in order to improve efficiency and profitability, and funding for research is now based on the assumption that outputs must be predictable and serve predictable interests. Success in resource management means that in the United States, tenured and tenure track teachers now make up only 35 per cent of the workforce, and the number is steadily falling, while senior management is getting bigger and more highly paid. Typically, between 1993 and 2007 management staffs at the University of California increased by 259 percent, total employees by 24 percent, and fulltime faculty by 1 percent. Nothing more clearly demonstrated that people’s brains were malfunctioning than academics failing to see what was coming and then failing to achieve any solidarity to defend themselves and their universities against managerialism, with academics in the humanities in this environment debunking their own disciplines on which their livelihoods depended. Basically, such academics could not even begin to defend the humanities, the quest to understand nature or uphold what universities were supposed to stand for because, deep down, they were already nihilists. Their failure paved the way for the rise of business faculties and the mass production of more managers.

Clearly, there is no easy solution to this. However, there is ample evidence that not only has this transformation of universities failed to deliver a more educated and productive workforce, the mass production of people with malfunctioning brains has begun to have an impact on virtually every facet of society, including the economy. This failure brings home the point that, for the left hemisphere to function, that which only the right hemisphere can deliver is required. People with healthy brains need to appreciate the threat of not only people with malfunctioning brains, but also their own potential. As McGilchrist suggests, the most important ability of humans is their capacity for imitation. Through imitation ‘we can choose who we become, in a process that can move surprisingly quickly.’ … We can ‘escape the “cheerless gloom of necessity”’ (p.253). A series of renaissances of civilization in Europe were built on this capacity. People picked themselves up from the ruins of the Dark Ages by looking back to the achievements of people in the Ancient World of Greece and Rome at their best, and imitating them, developed new education systems, new cultural and institutional forms and created a new civilization. In the ruins of the education system and the broader culture and society being created by people with malfunctioning brains it is time for a new renaissance, wiser than all previous renaissances because of what we can learn from their achievements and subsequent decay, and from what we can now learn from other civilizations, their inspiring figures and renaissances. As Slavoj Žižek wrote in an entirely different context, it is necessary to ‘follow the unsurpassed model of Pascal and ask the difficult question: how are we to remain faithful to the old in the new conditions? Only in this way can we generate something effectively new.’ Hopefully, with this wisdom from the past we will be able to avoid a new Dark Age.
McGilchrist’s book, providing new insights into the minds and modes of operation of those who undermine civilizations and a clearer idea of what constitutes healthy culture and the flourishing of civilization, is a major contribution to this wisdom.

References Cited in the above excerpts from this review:

Friedrich Nietzsche, The Will to Power, trans. Walter Kaufmann and R.J. Hollingdale, New York: Random House, 1968, p.9. Friedrich Jacoby had some intimation of this some hundred years earlier.

William Shakespeare, Macbeth, Act 5, Scene 5, 26-28.

Something else that people with left-brain dominance appear to be unable to take in. See Hans Joachim Schellnhuber, ‘Global Warming: Stop worrying, start panicking?’ PNAS, 105(37), Sept.16, 2008: 14239-14240. (http://www.pnas.org/content/105/38/14239.full.)

For a history of this, see Christopher Newfield, Unmaking the Public University: The Forty-Year Assault on the Middle Class, Cambridge, Mass.: Harvard University Press, 2008.

Chris Hedges, Empire of Illusion: The End of Literacy and the Triumph of Spectacle. New York: Nation Books, p.110 & 94.

See Aubrey Gwynn, Roman Education: From Cicero to Quintilian, Oxford: Clarendon Press, 1926, p.84ff. Falling short of the Greeks, the Romans gave no place to music or poetry, although Cicero famously defended the arts in his defence of the poet Aulus Lucinius Archia, who had been accused of not being a Roman citizen, in Pro Archia.

Wilhelm von Humboldt, Humanist Without Portfolio, Detroit, Wayne State University Press, 1963, p.132f.

Carl Boggs, Intellectuals and the Crisis of Modernity, New York: SUNY Press, 1993.

Richard Arum and Josipa Roksa, Academically Adrift: Limited Learning on College Campuses, London: University of Chicago Press, 2011, ch.2.

When there is a problem with mathematics in Science, it often does not appear to be the mathematics that is problematic; it seems to be what we might call pseudo mathematicians. Many, maybe even most, users of mathematics (applied mathematicians), as distinguished from creators of mathematics (pure mathematicians), appear to be pseudo mathematicians: they have been trained in some aspects of mathematics, and this has included the acquisition of some skills, but they have not been educated in applied mathematics. That is, they often appear not to understand the manner in which the products of these skills are coupled with the real world.

The problem with pseudo mathematicians is that their attempts to apply their training to science too seldom produce a better understanding of nature. They often appear to not even recognize that their results indicate that their work is either mathematically invalid (e.g., not self-consistent) or is of no scientific value and is likely to be of no pragmatic value for any purpose.

To be sure, there have lived some great mathematicians over the centuries of recorded history, and some of those have made important contributions to science; but they apparently comprise a tiny minority of all those who have received training in mathematics and have attempted to use it to advance science. Even among some excellent mathematicians, there have been contributions to science that some would argue were more of a setback than an advance. As one example, I mention the work of Stephen Crothers, a mathematics/physics critic who offers arguments that the revered Albert Einstein made enough serious errors in his mathematics for significant parts of his theories of physics to be nonsense. On Page 7.8, there is an index for an ongoing debate between Crothers and his critics who argue they are debunking Crothers’ purported scientific contributions. Though I am not an expert in this physics topic, my discussions with colleagues engaged in progressive physics suggest that the critics are the ones in need of debunking. For those who choose to read this overview of the debate, I recommend first reading earlier sections of this Page 7 on human nature and the scientific method and on the left-brain/right-brain theory of brain function in scientific endeavors.

Robert H. Lewis, Professor of mathematics at Fordham University, expands on the above thoughts as follows (http://www.fordham.edu/info/20603/what_is_mathematics):

Training is what you do when you learn to operate a lathe or fill out a tax form. It means you learn how to use or operate some kind of machine or system that was produced by people in order to accomplish specific tasks. People often go to training institutes to become certified to operate a machine or perform certain skills. Then they can get jobs that directly involve those specific skills.

Education is very different. Education is not about any particular machine, system, skill, or job. Education is both broader and deeper than training. An education is a deep, complex, and organic representation of reality in the student’s mind. It is an image of reality made of concepts, not facts. Concepts that relate to each other, reinforce each other, and illuminate each other. Yet the education is more even than that because it is organic: it will live, evolve, and adapt throughout life.

Education is built up with facts, as a house is with stones. But a collection of facts is no more an education than a heap of stones is a house.

An educated guess is an accurate conclusion that educated people can often “jump to” by synthesizing and extrapolating from their knowledge base. People who are good at the game “Jeopardy” do it all the time when they come up with the right question by piecing together little clues in the answer. But there is no such thing as a “trained guess.”

No subject is more essential nor can contribute more to becoming a liberally educated person than mathematics. Become a math major and find out!

Professor Lewis wrote an excellent essay—posted at the site cited above—from which the above quotation was excerpted, addressing the question “what is mathematics?” Regarding the reception of this essay, he wrote the following:

“Around December 19, 2010, this essay was “discovered” somehow and attracted an enormous amount of attention. For many hours, the web page was getting a hit every second! This is very gratifying, and I am grateful for the overwhelmingly positive response. Many people said it is the best thing they have ever read on the subject of mathematics education.”

I have posted a copy of this 3300-word essay below, and I have prefaced it with a few words of my own about what I consider to be a key link between education in mathematics and Iain McGilchrist’s left-brain/right-brain theory of thinking. I assume the reader is familiar with McGilchrist’s concepts, which are reviewed here on Page 7.6. My words here should be taken as a hypothesis, not a claim of fact.

A sufficiently strong Left Brain (LB) thinker can be technically trained in math skills. In fact, sufficiently strong LB thinking is likely a prerequisite for being trainable in mathematics. But, without the complement of strong Right Brain (RB) thinking, education in mathematics is probably impossible. By analogy, some training in medicine and anatomy is essential for providing good medical care, but it’s no guarantee.

Yet, no matter how strong the RB thinking is, education in mathematics is probably impossible without the complement of strong LB thinking. By analogy, profound capability in RB thinking can greatly enhance medical diagnosis and care but, without sufficient medical training, success is unlikely.

The problem with mathematics in science is that too many of the applied mathematicians with a LB technically trained in mathematics appear to not have a sufficiently active RB to have become educated in mathematics—to be able to take a balanced approach to the more creative open-ended activity of modeling nature with mathematical equations and the more well-defined mathematical manipulation of the model’s equations to seek solutions to be used to reflect back on the efficacy of the created model vis-a-vis the real world. In Lewis’ essay below, he lays blame primarily on the educational system in America. Although his arguments are likely valid, it seems that each student (at least once the student has advanced to the college level) has the responsibility to dig deeper and to be motivated to genuinely seek Truth. The perspective presented in this website applies equally to students and teachers and all those working in science and engineering.

To sum up, effective utilization of mathematics requires, at a bare minimum, LB proficiency for the technical detail of mathematical manipulation, and it requires RB proficiency to properly create a sufficiently high-fidelity mathematical model of reality and to critique that model. Constant back-and-forth activity between LB and RB is needed to ensure that the results obtained from the mathematical manipulation of the model are consistent with reality and, if they are not, to ensure that the model is made more realistic or that errors in the mathematical manipulation are sought out, until the results are sufficiently consistent with reality.

It has been said that 20th century astrophysics has run amok, overwhelmed by LB activity that is insufficiently balanced with the RB activity needed to ensure that the mathematics is consistent with reality. Do black holes exist in the real world? What about dark matter? There are those who argue these are mathematical constructs that go outside the bounds of physics and should never have been mistaken for real physics. Assuming for the moment that Crothers’ claims that Einstein’s revolutionary work is invalid are not disproved over time, it could be argued that the purported flaws in Einstein’s theories are attributable primarily to flawed RB thinking (although, according to Crothers’ identified mathematical errors, there was flawed LB thinking as well).

The relevance of this discussion to the primary subject of this website is the claim herein that science and engineering were done great harm by applied mathematicians’, engineers’, physicists’, and scientists’ apparently unquestioning adoption of the abstract stochastic process model FOR THE PURPOSE OF PROBABILISTIC ANALYSIS OF THE TIME-AVERAGE STATISTICS USED IN EMPIRICAL TIME-SERIES ANALYSIS, and by their consequent dismissal of the roots of the non-stochastic time-series model that came before. The harm is real because eventual development of this earlier model proved it to be conceptually superior, owing to its actual concrete relevance in these applications to time-series analysis.

This misstep appears to reflect inadequate RB activity, which would have been required to recognize that there is no necessity to use such unrealistic and overly abstract models. Their use has unnecessarily burdened teachers and students alike, and of course practicing engineers and scientists, with the challenge of bringing to bear the considerable RB activity required to make sense of the huge conceptual gap between the reality from nature of a single time series of measured/observed data and the mathematical fiction of a typically infinite ensemble of hypothetical time series together with an axiomatic probability law (a mathematical creation) governing the ensemble average over all the fictitious time series. All these poor unsuspecting individuals were left to close this conceptual gap on their own, armed with nothing more than a mathematical theorem that can only rarely be applied in practice. This theorem gives the mathematical condition on a stochastic process model under which its ensemble averages equal (in an abstract sense; i.e., with probability equal to 1) the time averages over individual time series in the ensemble, regardless of any relationship these time averages may or may not have with actual measured finite-time averages made by the empiricist.

This condition on the probability law ensures that expected values of a proposed stochastic process mathematically calculated (a LB activity) from the mathematical model are approximated by finite-time averages measured from a single time-series member of the ensemble, assumed to be the time series that actually exists in practice. Unfortunately, the theorem, called the Ergodic Theorem, is typically of little-to-no operational utility because the condition on the probability law can only rarely be tested: either the test is intractable, or the stochastic process model is insufficiently defined. Thus, most time-series analysis users of stochastic process theory rely conceptually on what is called the Ergodic Hypothesis, by which one simply assumes the condition of the Ergodic Theorem is satisfied for whatever stochastic process model one chooses to work with. Faith of this sort has no place in science and engineering.
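To make the gap between ensemble averages and time averages concrete, here is a minimal numerical sketch. It is an illustration under stated assumptions, not a construction taken from this website: the two toy models, the function names, and the parameter values are all hypothetical. Both models have the same ensemble mean (1.0), but only the first satisfies the ergodic condition for the mean. In the second, a level is drawn once per realization, so the time average of the single record an empiricist actually holds settles near 0 or near 2 and never near the model's expected value.

```python
import random


def time_average(samples):
    """Finite-time average of one measured record."""
    return sum(samples) / len(samples)


def ergodic_member(n, rng):
    """One realization: unit-variance Gaussian noise about a fixed level of 1.0."""
    return [1.0 + rng.gauss(0.0, 1.0) for _ in range(n)]


def non_ergodic_member(n, rng):
    """One realization: the level (0.0 or 2.0) is drawn once, then held for all time."""
    level = rng.choice([0.0, 2.0])
    return [level + rng.gauss(0.0, 1.0) for _ in range(n)]


def compare(make_member, n_samples=2000, n_members=200, seed=1):
    """Return (ensemble average at one time instant, time average of each member)."""
    rng = random.Random(seed)
    ensemble = [make_member(n_samples, rng) for _ in range(n_members)]
    # Average across the hypothetical ensemble at a single time instant.
    ensemble_avg = sum(member[0] for member in ensemble) / n_members
    # Average along time, separately for each member of the ensemble.
    time_avgs = [time_average(member) for member in ensemble]
    return ensemble_avg, time_avgs


if __name__ == "__main__":
    for name, maker in [("ergodic", ergodic_member),
                        ("non-ergodic", non_ergodic_member)]:
        ens, t_avgs = compare(maker)
        print(f"{name}: ensemble average = {ens:.2f}, "
              f"time averages range from {min(t_avgs):.2f} to {max(t_avgs):.2f}")
```

In practice only one record is available, so the ensemble average computed here cannot be measured at all; that is the sense in which the Ergodic Hypothesis must be taken on faith.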

In my opinion, acceptance of these abstractions, namely sample spaces comprising ensembles of time series, and going forward with the stochastic process concept as the only option for probabilistically modeling real time-series data requires abandonment of RB thinking. There really is no way to justify this abstraction of reality as a necessary evil. The fraction-of-time probabilistic model of a single time series is an alternative option that avoids departing from the reality of measured/observed time-series data, its empirical statistical analysis, the mathematical modeling of the actual time series, and the results of the analysis. The wholesale adoption by academicians of the stochastic process suggests low-level RB activity.
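As a rough illustration of how a fraction-of-time distribution can be computed directly from one record, with no ensemble anywhere in sight, consider the following sketch. The function names and the sampled sine-wave record are hypothetical choices made only for this example; the treatment on page 3 remains the authoritative one.

```python
import math


def fot_cdf(samples, threshold):
    """Fraction of the observation time during which the record is <= threshold."""
    return sum(1 for x in samples if x <= threshold) / len(samples)


def fot_mean(samples):
    """Finite-time average of the record (the mean of the fraction-of-time distribution)."""
    return sum(samples) / len(samples)


# Hypothetical measured record: one full period of a sine wave, uniformly sampled.
record = [math.sin(2 * math.pi * k / 1000) for k in range(1000)]

if __name__ == "__main__":
    for level in (-0.5, 0.0, 0.5):
        print(f"fraction of time at or below {level:+.1f}: {fot_cdf(record, level):.3f}")
    print(f"finite-time mean: {fot_mean(record):.3f}")
```

Every quantity here is a property of the single observed time series: the distribution value at 0.5, for example, is simply the fraction of the record that lies at or below 0.5 (about two thirds for this sine wave).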

To be perfectly clear, it is explained on page 3 that there are two versions of Fraction-of-time Probability models for single time series: 1) that referred to above, which is based on finite-time averages, and 2) an idealized version in which a mathematical model for the finite-time data is posited and the limit of this model as the length of the time series approaches infinity is adopted as the desired model. The motivation for this idealized version is fully explained on page 3. It can be said to be one step removed from reality because infinite time is unreal. But, it can be argued that this departure from reality is quite conservative compared with the stochastic process, particularly when there is no ensemble of time series in the application at hand.
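The two versions can be written compactly as follows. The notation is an assumption made for this sketch (x(t) for the single time series, T for its length, u for the unit step, and F for the fraction-of-time distribution) and may differ from the notation used on page 3.

```latex
% Finite-time version: fraction of the interval [0, T] during which x(t) <= \xi
F_T(\xi) = \frac{1}{T} \int_{0}^{T} u\bigl(\xi - x(t)\bigr)\, dt,
\qquad
u(v) = \begin{cases} 1, & v \ge 0 \\ 0, & v < 0 \end{cases}

% Idealized version: the limit is assumed to exist for the posited model of x(t)
F(\xi) = \lim_{T \to \infty} F_T(\xi)
```

Only the second expression involves a departure from what can be measured, and that departure is the single step of letting the record length grow without bound.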

So, is there a real problem with mathematics in science? It is argued here in the affirmative, but the blame is laid at the feet of the users of mathematics.

The world stage where the detrimental influence of human nature on scientific progress often plays out is comprised of scientific debates. There are quite a few websites on both current scientific debates and the history of scientific debates. As would be expected, the quality of contributions varies widely, some reflecting the detrimental influence of human nature to an extreme, typically cluttered with personal attacks, name calling, and little if any objective reasoning—particularly those presented by writers/talkers who consider themselves debunkers or science-based skeptics but are actually just cynics who often lack adequate education in the physical sciences; and others providing what at least appear to be credible arguments and much food for thought, including apparently appropriate citations to supporting peer-reviewed scientific research papers.

In this website, the only debate of direct interest is that addressed on page 3.6, regarding fraction-of-time probability vs stochastic probability models for time series data. But, on this page 7, a number of fields in which serious debates have occurred are mentioned and here, on page 7.8, one field of study has been chosen to illustrate the bad behavior of cynics who impede scientific progress: astrophysics and cosmology. Provided below is an index to the Crothers Debate in cosmology, but first the user is referred to the broader Electric Universe vs Plasma Universe debate addressed by Robert J Johnson.

The EU vs. PU Debate

Apparently credible argumentation not requiring multiple books and videos to present is given in Johnson’s article Why the ELECTRIC UNIVERSE® isn’t the same as the Plasma Universe and why it matters. Nevertheless, I am not able to state that Johnson’s arguments are valid and the debate in favor of the PU in contrast to the EU has been won, or otherwise, because I am not sufficiently well educated in astrophysics to take such a strong position. But, to the extent that the well-presented arguments provided by Johnson are not countered in a similarly scientific manner, the EU’s propositions that Johnson has criticized do not stand as strongly as they might otherwise.

A major new book on the Electric Universe Paradigm by the leading and talented principal of the EU movement, Wallace Thornhill (who has an unusually significant number of successful astrophysical predictions to his credit), was forthcoming at the time this section 7.8 was written (2022). I had hoped it would explicitly address Johnson’s criticism (or any other researched and apparently well-thought-out scientific criticism) so we would have a more balanced view of the status of this debate. However, even more important, in my estimation, is the debate between the standard model of cosmology, which is based on the well-known gravity-centric theory of astrophysics, and the less mature model of cosmology, which is based on the still-developing electromagnetism-centric theory of astrophysics.

The EU/PU vs. GU Debate

The presently evolving EU/PU theories, in which electromagnetic forces play the central role in Astrophysics, require the ubiquitous existence of plasma (clouds of ionized particles) throughout the universe. In contrast, the Gravitational Universe (GU) as we might call the Standard Model of the last century assumes the central role is played by gravitational forces. At this stage (the close of 2022), this ubiquitous presence of plasma has indeed been experimentally established beyond any doubt, and the now-visible fact that this plasma is carrying astronomical streams of electric current throughout the universe is no longer scientifically questionable.

The totality of books and essays and videos and all manner of information on the EU promulgated by The Thunderbolts Project is overwhelming in volume and consists of a mix of scientific contributions and contributions aimed at popularizing EU concepts for the benefit of the general public; so a new superseding book, based entirely on the scientific method, could go a long way toward providing an up-to-date accounting of the fundamental tenets of the EU paradigm and its core theory in whatever state of development it is presently. (It is assumed that the differences in the EU/PU debate will get resolved; so, for now, I use the terminology EU in place of EU/PU.)

While we await such a scientific treatment of the EU paradigm, readers of this website, many of whom are likely not astrophysicists, are referred to the very readable and free-of-charge book by the late Thomas Findlay, who passed away on 11 July 2021 in Ayr, Scotland. Findlay’s book is one of a kind, written by an astute layperson for the layperson while still being as true to the science as his sources. His objective in making this contribution free to the world was to help spread the word of the proposed Electric Universe Paradigm. This is a fascinating concept that has been argued to be supported by much empirical evidence, but which, according to some, has not been scientifically proven to an extent that originators and long-time supporters of the standard cosmological model consider sufficient for them to accept. This resisted paradigm has the potential for immense ramifications; so, it is important that Findlay spread the word to lay people far and wide.

Consistent with the theme of this page 7, one wonders whether the supporters of the status quo, the Gravity-Centric Theory of Cosmology, have set the bar unreasonably high for accepting the already-given proof of ubiquitous electromagnetic activity throughout the universe.

Note added 16 February 2023

Wal Thornhill passed on during the week of 7 February 2023. His book in preparation, which essentially everyone with interest in the Electric Universe paradigm has been anxiously awaiting, has not been completed. During Wal’s eulogy today, it was stated that he was a world-leading theorist of Electric Universe cosmology, the Chief Science Advisor to The Thunderbolts Project, and a key science consultant for the Safire Project. Wal devoted a major part of the last several decades of his unusually productive life to a fiercely determined effort to deconstruct the already-failed Gravity-Centric Theory of Astrophysics using the Scientific Method, a crucial requirement for real science that so many others have not honored as genuinely as they must if they wish to be considered scientists and truly contribute to the advancement of science. It was also stated in his eulogy, and has been said in one way or another by many of those most familiar with Wal’s work, that he will be seen as one of the chief architects of the approaching paradigm shift from the failed gravity-centric cosmology and largely erroneous gravitational theory of astrophysics to an immensely more successful electromagnetism-centric cosmology and a genuinely valid electromagnetic and plasma theory of astrophysics.

Wal is the quintessential example of a superior subspecies of Humans, now mostly extinct, referred to as Natural Philosophers. In my estimation, he clearly benefited from a balanced brain with demonstrated RB and LB capabilities.

Note added 4 April 2023

Wal’s work last year, based on a careful analysis of published observations from the James Webb Space Telescope, has recently been published posthumously as a YouTube video. This work unequivocally proves that the Big Bang Hypothesis and the Red-Shift Theory upon which the hypothesis is based are both patently false. There is no scientific evidence of there ever having been a Big Bang, and the standard method of measuring the distance of stars from Earth has been wholly discredited. This major scientific advance will usher in the badly needed and overdue paradigm shift from the failed status quo to the hugely promising astrophysical models based primarily on the cosmic forces of electromagnetism, not those of gravity. The EU/PU vs. GU Debate, though relatively low profile due to the wrong-headed refusal of the GU proponents to participate as true science would demand, is being won by those who have shown more respect for the scientific method.

The Crothers Debate

As referred to on Page 7.7, included here is an index for the Crothers Debate. This index was prepared by Stephen Crothers, not by an independent third party. Nevertheless, it appears to me that Crothers is the most careful writer, which suggests to me that he may be the most careful thinker among those who have participated in this debate. (This is not to say that I (WCM) consider myself qualified to pass judgment on who is winning or has won this debate; this may take many years.) So, who better than Crothers to index this debate? Everything surveyed is posted on the web, so at least it has been and continues to be open to criticism. Some would consider Crothers’ writings to be disrespectful in some places, but this seems unavoidable, given that he is arguing against figures who consider themselves authorities, show no tolerance for dissent, and are themselves disrespectful.

A brief perusal of the Internet reveals that Crothers has garnered little respect for his work. In keeping with the theme of this Chapter 7, this may be the strongest indication that he is onto something important… or not — time will tell.

1. William D. Clinger

A Caustic Critic – William D. Clinger http://www.sjcrothers.plasmaresources.com/Clinger.html

My Malicious, Gormless Critics http://www.sjcrothers.plasmaresources.com/critics.html

2. Professor Gerardus ‘t Hooft

Crothers, S. J., General Relativity: In Acknowledgement of Professor Gerardus ‘t Hooft, Nobel Laureate, https://vixra.org/pdf/1409.0141v2.pdf

Crothers, S. J., Gerardus ‘t Hooft, Nobel Laureate, On Black Hole Perturbations, http://vixra.org/pdf/1409.0141v2.pdf

My Malicious, Gormless Critics http://www.sjcrothers.plasmaresources.com/critics.html

3. Dr. Jason J. Sharples

Crothers, S. J., The Black Hole Catastrophe: a Reply to J. J. Sharples, http://vixra.org/pdf/1011.0062v1.pdf

Crothers, S. J., The Black Hole Catastrophe: A Short Reply to J. J. Sharples The Hadronic Journal, 34, 197-224 (2011), http://vixra.org/pdf/1111.0032v1.pdf

Crothers, S.J., Reply to the Article ‘Watching the World Cup’ by Jason J. Sharples, http://vixra.org/pdf/1603.0412v1.pdf

Crothers, S.J., Counter-Examples to the Kruskal-Szekeres Extension: In Reply to Jason J. Sharples, http://vixra.org/pdf/1604.0301v1.pdf

My Malicious, Gormless Critics http://www.sjcrothers.plasmaresources.com/critics.html

4. Dr. W. T. ‘Tom’ Bridgman

Something about Tom Bridgman http://www.sjcrothers.plasmaresources.com/Bridgman.html

5. Dr. Christian Corda

Crothers, S.J., On Corda’s ‘Clarification’ of Schwarzschild’s Solution, Hadronic Journal, Vol. 39, 2016, http://vixra.org/pdf/1602.0221v4.pdf

My Malicious, Gormless Critics http://www.sjcrothers.plasmaresources.com/critics.html

6. Dr. Gerhard W. Bruhn

Letters from a Black Hole http://www.sjcrothers.plasmaresources.com/BHLetters.html

My Malicious, Gormless Critics http://www.sjcrothers.plasmaresources.com/critics.html

7. Dr. Chris Hamer

Mr. Hamer never wrote anything about my work. All he did was have a short meeting with me in his UNSW office. At that meeting I asked him what the symbol r in the so-called ‘Schwarzschild solution’ represented. He told me that it is the radius. After that I terminated the meeting because his answer was incorrect. The symbol r is not the radius of anything in the said solution. Indeed, r² is the inverse of the Gaussian curvature of the spherical surface in the spatial section, which I have proven mathematically (e.g., see the first appendix of my Acknowledgement of Professor Gerardus ‘t Hooft Nobel Laureate above). http://www.sjcrothers.plasmaresources.com/index.html

8. Dr. Roy Kerr

Mr. Kerr never wrote anything about my work. All he did was reply to me in an email saying in hostile tone that my work is rubbish (no proofs whatsoever). Any fool can do that so he counts for nothing, so I had nothing to respond to. http://www.sjcrothers.plasmaresources.com/index.html

9. Dr. Malcolm McCallum

Mr. McCallum only ever sent me emails, as I reported on my webpage. He angrily used condescending and insulting language so I lost my temper (unfortunately) and gave him some back in kind. That made him even more angry. http://www.sjcrothers.plasmaresources.com/index.html

10. Mr. David Waite

A Foolish Critic Named David Waite http://www.sjcrothers.plasmaresources.com/Waite.html

The Debate on the Structure of the Atom

The structure of the atom has been a subject of debate for over two centuries, and the quantum-mechanical model of the atom has been at the center of debate for the last century. With the 2021 release of the book The Nature of the Atom: An Introduction to the Structured Atom Model, coauthors J. E. Kaal, J. A. Sorensen, A. Otte, and J. G. Emming have introduced a proposed paradigm shift that will likely be at the center of ongoing debate on this critically important subject for many years to come. The development of this new theory has links to the Electric Universe paradigm, itself a subject of intense debate. Undoubtedly, the many proponents of the Quantum Mechanics theory of the atom will fight ruthlessly in defense of its preservation. Viewers of this website are encouraged to follow this debate but to always keep in mind that human nature will play a central role in this debate, to the detriment of scientific progress. In fact, the coauthors of this book have made the following statement in their Preface, which captures the essence of this page 7:

“In the introduction, we consider the rules of science we want to be measured against. There is nothing special about these rules, except that we do not follow the one rule that is never mentioned, never written down, never spoken about openly—the rule that currently dominates all other known rules of science, that is, work is scientific if and only if it follows currently accepted theory. If it does not do that and does not adhere to current wisdom, then this work has, by definition, severe scientific errors—it does not meet scientific standards and surely the proper scientific methods have not been used. What has been created is at best pseudoscience. This is the one, most unscientific rule of all, that sadly governs large parts of science today.”

Reality is often thought to be that which agrees with our five senses.

These senses enable us to perceive macroscopic matter and forces and, with the aid of microscopes and telescopes, even microscopic and astronomical matter and motion of matter, but not associated forces.

Although we can feel macroscopic forces, we cannot see them. We cannot see the mathematical constructs we call force fields—gravitational and electromagnetic.

So, very little of the stuff physics is made of is real, with respect to our senses.

This raises the question of what reality is. Since our theories of matter and forces are not settled, neither is the answer to this question. And, even if we ever reached the state of believing our theories were settled, would we know what reality is?

The world—the Universe—is much more than matter. Matter does not exist without the forces that bind its components together and the forces that create dynamics—movement at all scales, rotation, revolution, flow, expansion, contraction . . .

The essential component of our World is force. Without it, there would be no life. Without life, no concept of reality.

Yet force is the quintessential unknown. We have no idea what creates the force of gravity, what makes electric charges repel or attract each other, or what creates magnetic force. Our explanations are only descriptions of observed behavior, with no proven concept of why this behavior occurs.

Everything we perceive or think is a concept or a hypothesis—it is not reality. A concept is, in essence, a model—a model of reality. We know and use many models in our existence, and we do so as if these models were reality, but they are not.

Here lies the basis for the ubiquitous miscommunication among people that is the source of essentially all human conflict: People whose models of reality differ from each other are destined to miscommunicate.

Does reality even exist? If not, what is the true purpose of science? If it is not to discover reality, it would seem the purpose is to continually refine our models to enable us to more powerfully manipulate our World, ostensibly to our benefit but, unfortunately and too often, to our loss: man-made nuclear explosions, man-made climate change, fouled soil, water, and air, forced extinction of species, toxic processed foods, health degradation resulting from inactivity due to automation. There is so much we do in the name of progress that takes a toll.

Perhaps that’s all reality is: the realization that we are destined to destroy ourselves as a result of our presumption, our misguided confidence, our ignorance that leads us to believe we can improve on nature—something we know so very little about.

To balance this view of science, I hasten to mention that science HAS benefitted humanity in many ways, even though our concepts of scientific truth—a misnomer—are not constants: they are in a continuing state of evolution.