The IEEE Signal Processing Society defines the field of signal processing as follows:

Signal processing is the enabling technology for the generation, transformation, extraction, and interpretation of information. It comprises the theory, algorithms with associated architectures and implementations, and applications related to processing information contained in many different formats broadly designated as signals. Signal processing uses mathematical, statistical, computational, heuristic, and/or linguistic representations, formalisms, modeling techniques and algorithms for generating, transforming, transmitting, and learning from signals.

Within this broad field, various types of subdivisions are made. Among these is the subdivision according to signal type. Signals can be classified according to the variety of their physical origins, ranging over the various fields of science and engineering. But here, we consider classification according to properties of their behavior defined in terms of mathematical models. Some of these classes are listed here. There are signals with finite energy—signal functions whose square, when integrated over all time, is finite. In many cases, this type of signal is also nonzero over only finite-length intervals of time. A complementary class of signals consists of those with finite time-averaged power—signal functions whose square, when averaged over all time, is finite. Such signals must be persistent; they cannot die away as finite-energy signals must. The class of finite-average-power signals can be partitioned into the subclasses of what are called statistically stationary, cyclostationary, polycyclostationary, or otherwise nonstationary functions of time. These classes are defined at the beginning of the Home page.
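The difference between the finite-energy and finite-average-power classes can be checked numerically. The following is only an illustrative sketch under stated assumptions: the integrals are approximated by Riemann sums over a finite record of duration T, and the two example signals (a unit rectangular pulse and a unit-amplitude cosine) are hypothetical choices, not taken from the text. As T grows, the pulse's energy stays fixed while its average power decays toward zero, whereas the cosine's energy grows in proportion to T while its average power settles at 1/2.

```python
import math

def sample(f, T, dt):
    """Sample the function f on [-T/2, T/2) with step dt."""
    n = int(round(T / dt))
    return [f(-T / 2 + k * dt) for k in range(n)]

def energy(x, dt):
    """Riemann-sum approximation of the integral of x(t)**2 over the record."""
    return sum(v * v for v in x) * dt

def average_power(x, dt):
    """Energy of the record divided by its duration T = len(x) * dt."""
    return energy(x, dt) / (len(x) * dt)

def pulse(t):
    """Hypothetical finite-energy example: unit pulse on [-1/2, 1/2)."""
    return 1.0 if -0.5 <= t < 0.5 else 0.0

def sinusoid(t):
    """Hypothetical finite-average-power example: persistent unit cosine."""
    return math.cos(2 * math.pi * t)

dt = 0.01
for T in (10.0, 100.0, 1000.0):
    p, s = sample(pulse, T, dt), sample(sinusoid, T, dt)
    print(f"T={T:6.0f}  pulse: E={energy(p, dt):.3f}, P={average_power(p, dt):.5f}"
          f"  sinusoid: E={energy(s, dt):.3f}, P={average_power(s, dt):.3f}")
```

As T increases from 10 to 1000, the pulse's energy should stay near 1 while its power falls roughly as 1/T; the cosine's power should stay at 0.5 while its energy grows as T/2.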

This introduction to cyclostationary signals is set in motion using the presentation slides from the opening plenary lecture at the first international Workshop on Cyclostationarity. Some readers may wonder why this is appropriate considering that this workshop was held 30 years ago (in 1992)! I consider this appropriate because I developed these slides specifically for a broad group of highly motivated students. I say they were students solely because they traveled from far and wide specifically to attend this educational program. In fact, the participants of the workshop were mostly senior researchers in academia, industry, and government laboratories. Knowing the workshop was a success and knowing all the topics covered are as important today as they were then, I have chosen this presentation as ideal for the purpose at hand here. Sections I, II, III, and V are reproduced below. Section IV is reproduced on Page 3.2.

Following these presentation slides are reading recommendations for the beginner, including internet links for free access to the reading material such as books and articles/papers from periodicals.


Reading Recommendations

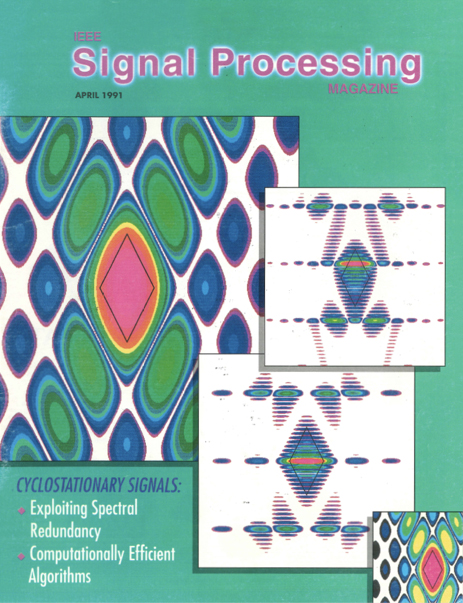

The most widely cited single article introducing the subject of cyclostationarity is entitled “Exploitation of Spectral Redundancy in Cyclostationary Signals” and, as of this writing (2018), was published almost three decades ago (1991) in the IEEE Signal Processing Society’s IEEE Signal Processing Magazine, vol. 8 (2), pp. 14-36. According to Google Scholar, this tutorial article had been cited in 1,217 research papers as of 1 July 2018 and in 1,404 papers as of this update on 19 October 2021. This represents a current 3-year-average growth rate of almost five new citations every month in its 30th year. Based on this evidence, this introductory article appears to have been the most popular among researchers, and visitors to this website are therefore referred to it for the first recommended reading. The link [JP36] provides free access to the entire special issue.

Fifteen years later, in 2006, the most comprehensive survey of cyclostationarity at that time, entitled “Cyclostationarity: Half a Century of Research”, was published in the European Association for Signal Processing journal Signal Processing, vol. 86 (4), pp. 639-697. According to Google Scholar, this survey had been cited in 740 research papers as of 1 July 2018 and in 1,042 papers as of this update on 19 October 2021. This represents a current 3-year-average growth rate of almost eight new citations every month in its 15th year. This survey paper received the “Most Cited Paper Award” from the publisher (Elsevier) in 2008; and, each year from its first appearance online through 2011, it was the most cited paper among those published in Signal Processing in the previous five years, and among the top 10 most downloaded papers from Signal Processing. Based on this evidence, this comprehensive survey paper appears to have been the most popular among researchers, and visitors to this website are therefore referred to this paper [JP64] for the second recommended reading. However, new students of this subject are advised not to read this survey paper thoroughly at this stage; it should just be perused to widen one’s perspective on the scope of this subject as of 2006.

For visitors to this website looking for an introduction to the 2nd-order (or wide-sense) theory of cyclostationarity at an intermediate level—more technical than the magazine article cited above but less technical and considerably less comprehensive than the survey paper also cited above—the journal paper entitled “The Spectral Correlation Theory of Cyclostationary Time-Series” [JP15] is recommended. This paper was published in 1986 in the journal Signal Processing, vol. 11, pp. 13-36. An indication that this paper was well received is that it was awarded the best paper of the year by the European Association for Signal Processing. In contrast to the 1,217 citations of the magazine article recommended above, this journal paper had been cited in only 351 research papers as of 1 July 2018; as of this update on 19 October 2021, its citations have grown to 431, a 3-year rate of two new citations every month in its 35th year. It is suggested here that this lesser popularity reflects differences in the readerships of the 1991 magazine and the 1986 journal more than it reflects the utility of the paper.

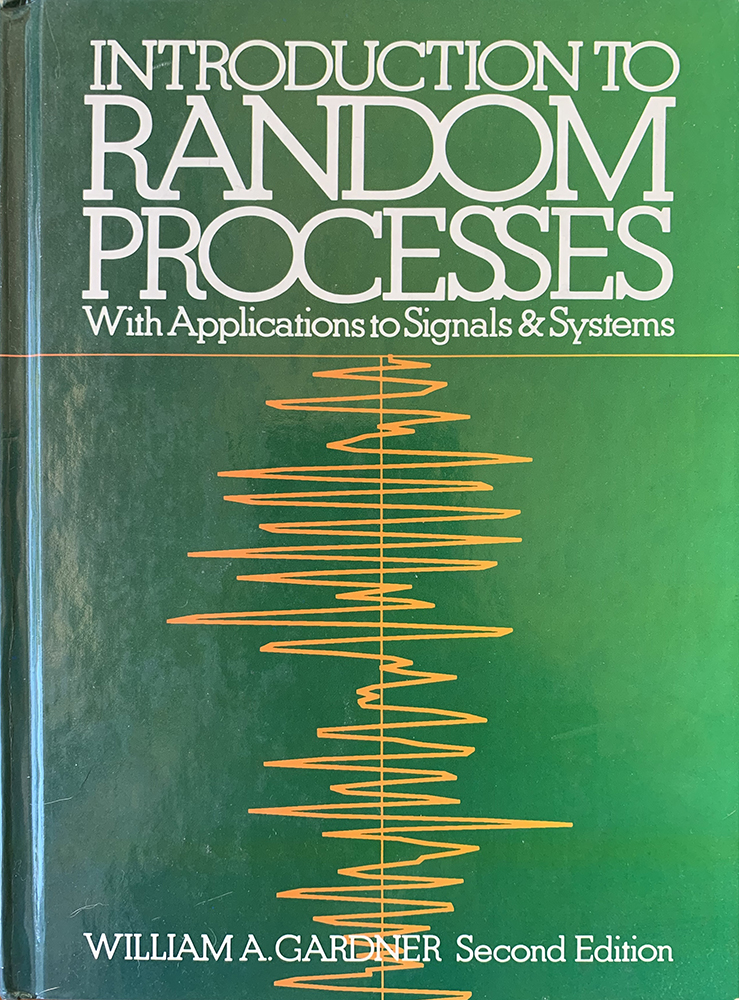

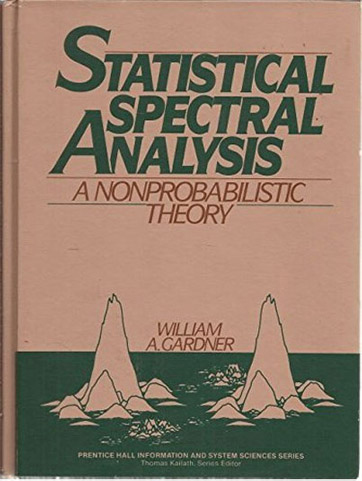

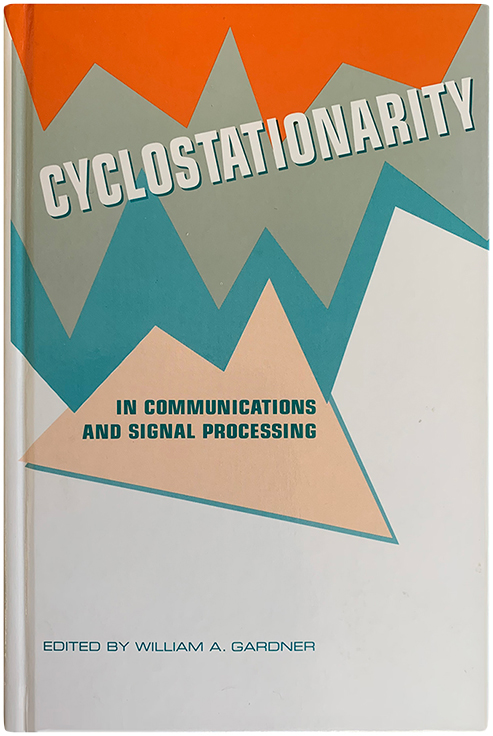

The textbooks/reference-books about cyclostationarity that have been cited most frequently in research papers, both as of this writing (2018) and as of this update on 19 October 2021, are the following three books, which together comprise over 1,600 pages and have been cited in 3,080 research papers over the last three decades.

One more recommendation for students of cyclostationarity is Chapter 1 in the above-cited book [Bk5], which provides an introduction to the subject that is considerably broader and deeper than the introductions in the articles [JP15] and [JP36], also cited above. In fact, in the 25 years since publication of [Bk5], no other introduction even approaching the breadth and depth of this chapter can be found in the literature.

The above seven recommended readings have been cited in nearly 6,000 research papers tracked by Google Scholar, an average of about 200 citations per year over 30 years. For emphasis, at the cost of redundancy, it is stated here before moving on that these citation statistics provide the basis for the WCM’s selection, from among all published tutorial treatments of cyclostationarity, of this particular set of seven. It will not go unnoticed that every one of these recommended readings was written by the WCM. No other treatments that are as popular as these are known to the WCM.

However, as of late 2019, there has been a major step forward in the publication of comprehensive book treatments of cyclostationarity that can be highly recommended for serious students of the subject: Cyclostationary Processes and Time Series: Theory, Applications, and Generalizations, by Professor Antonio Napolitano, the most prolific contributor to this field for two decades now. Besides providing the most comprehensive treatment of the subject—a treatment that is both mathematical and very readable—including both the FOT probability theory and the stochastic probability theory of almost cyclostationary time series or processes and all established generalizations of these (discussed in the following section of this page), this book is also the most scholarly treatment since the seminal book [Bk2]. As a historian of time-series analysis, I can say with confidence that no other treatment of the history of contributions to the theory of cyclostationarity can compete with this exemplary book. For the foreseeable future, it will be *the* definitive treatment covering the period from the inception of this subject of study to the end of 2019 (more than half a century).

Higher-Order Cyclostationarity

Although the first comprehensive treatment of cyclostationarity, which focused on the theory of 2nd-order temporal and spectral moments, appeared in 1987 [Bk2], a similarly comprehensive theory of higher-order temporal and spectral moments did not appear for another 7 years ([JP55], [JP56]), four years after the first introduction of nth-order spectral moments for cyclostationary time series [B2, Ref. Gardner 1990c]. This theory, which is based on Fraction-of-Time (FOT) Probability, exposed a number of issues with the alternative theory of higher-order moments based on stochastic processes, both stationary and cyclostationary. It also led to the discovery of an entirely new interpretation of cumulants, one that makes no reference to the more abstract stochastic process. Rather, the cumulant is derived as the answer to the question “how does one calculate the strength and phase of a pure-nth-order sine wave from a time series exhibiting cyclostationarity?” The question does not involve classical probability or stochastic processes; yet its answer is “calculate the nth-order cyclic cumulant,” which is a function of the cyclic moments in the FOT-Probability Theory. The 2-part paper [JP55], [JP56] is the WCM’s first recommended reading on this subject of higher-order cyclostationarity. The second recommendation is Section 13 of the comprehensive survey paper [JP64], and the third recommendation is Chapter 4 in the encyclopedic book [B2], both of which contain references to diverse sources. Other recommended reading includes the book chapter [BkC3] and Chapter 2 in the book [Bk5]. According to Google Scholar as of April 2022, [JP55] and [JP56] have been cited 352 and 261 times, respectively. In comparison with the citation numbers given above on this Page 2, this subject appears to be only about 1/3 as popular as the second-order theory. This likely reflects the smaller number of practical applications of the higher-order theory.
Yet, there are some interesting applications where the higher-order theory is essential (see [JP64] and Sec. 4.9 in [B2]).
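To make the sine-wave interpretation of cyclic moments and cumulants more concrete, here is a minimal sketch. It is not the method of [JP55], [JP56]; it assumes zero lags, a real discrete-time signal with no first-order sine waves, and 2nd-order cycle frequencies (in cycles per sample) that are known in advance. The cyclic moment is estimated as a finite time average of the lag product x[t]^n times a complex exponential, which extracts the complex amplitude (strength and phase) of the sine wave at frequency alpha. The 4th-order cyclic cumulant then subtracts the part of that sine wave produced by products of 2nd-order sine waves. The function names and the test signal (random ±1 signs multiplying a cosine at 0.1 cycles/sample) are hypothetical; the signal is chosen so the results can be checked by hand, since the squared signs equal 1 and therefore drop out of the zero-lag moments.

```python
import cmath
import math
import random

def cyclic_moment(x, alpha, n):
    """Finite-time estimate of the zero-lag nth-order cyclic moment: the complex
    amplitude (strength and phase) of the sine wave at frequency alpha
    (cycles/sample) contained in the lag product x[t]**n."""
    return sum((v ** n) * cmath.exp(-2j * math.pi * alpha * t)
               for t, v in enumerate(x)) / len(x)

def cyclic_cumulant4(x, beta, alphas2):
    """Zero-lag 4th-order cyclic cumulant of a real signal assumed to have no
    first-order sine waves. From the 4th-order sine wave at beta, subtract the
    part produced by products of 2nd-order sine waves whose frequencies sum to
    beta; the factor 3 counts the three ways to pair four identical factors.
    alphas2 must list every 2nd-order cycle frequency of x (an assumption)."""
    lower = sum(cyclic_moment(x, a, 2) * cyclic_moment(x, beta - a, 2)
                for a in alphas2)
    return cyclic_moment(x, beta, 4) - 3 * lower

# Hypothetical test signal: random +/-1 signs times a cosine at f0 = 0.1.
random.seed(1)
N, f0 = 1000, 0.1
x = [random.choice((-1.0, 1.0)) * math.cos(2 * math.pi * f0 * t)
     for t in range(N)]

alphas2 = [-2 * f0, 0.0, 2 * f0]  # 2nd-order cycle frequencies of this x
print(round(abs(cyclic_moment(x, 2 * f0, 2)), 6))   # 2nd-order, alpha = 0.2
print(round(abs(cyclic_moment(x, 0.3, 2)), 6))      # not a cycle frequency
print(round(abs(cyclic_moment(x, 4 * f0, 4)), 6))   # 4th-order, alpha = 0.4
print(round(cyclic_cumulant4(x, 4 * f0, alphas2).real, 6))
```

With N = 1000, the printed values should be close to 0.25, 0, 0.0625, and -0.125. Of the 4th-order sine-wave amplitude 1/16 at 0.4 cycles/sample, an amount 3/16 is accounted for by products of 2nd-order sine waves, leaving a pure 4th-order part of -1/8.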

Relative to the aforementioned three introductory but thorough treatments of cyclostationarity, there is one textbook/reference-book that is highly complementary and can be strongly recommended for advanced study, entitled Generalizations of Cyclostationary Signal Processing: Spectral Analysis and Applications. The generalizations of cyclostationarity introduced in this unique book are summarized on Page 5.

An even more recent development of the cyclostationarity paradigm is its extension to signals that exhibit irregular cyclicity, rather than the regular cyclicity we call cyclostationarity. This extension enables application of cyclostationarity theory and methods to time-series data originating in many fields of science where there are cyclic influences on the data (observations and measurements of natural systems) but the cyclicity is irregular, as it typically is in nature. This extension originates in the work presented in the article “Statistically Inferred Time Warping: Extending the Cyclostationarity Paradigm from Regular to Irregular Statistical Cyclicity in Scientific Data,” written in 2016 and published in 2018 [JP65]; see also [J39].

However, from an educational perspective, visitors to this website whose objective is to develop a firm command of not only the mathematical principles and models of cyclostationarity but also the conceptual link between the mathematics and empirical data—a critically important link that enables the user to insightfully design or even just correctly use algorithms for signal processing (time-series data analysis)—are strongly urged to take a temporary detour away from cyclostationarity per se and toward the fundamental question:

“What should be the role of the theory of probability and stochastic processes in the conceptualization of cyclostationarity and even stationarity?”

As discussed in considerable detail on Page 3, one can argue quite convincingly that, from a scientific and engineering perspective, a wrong step was taken back around the middle of the 20th Century in the nascent field of time-series analysis (more frequently referred to as signal processing today) when the temporal counterpart referred to here—introduced by Norbert Wiener in his 1949 book, Extrapolation, Interpolation, and Smoothing of Stationary Time Series, with Engineering Applications—was rejected by mathematicians in favor of Ensemble Statistics, Probability, and Stochastic Processes. This step away from the more concrete conceptualization of statistical signal processing that was emerging and toward a more abstract mathematical model, called a stochastic process, is now so ingrained in what university students are taught that few STEM (Science, Technology, Engineering, and Mathematics) professors and practitioners are even aware of the alternative that is, on this website, argued to be superior for the great majority of real-world applications—the only advantage of stochastic processes being their amenability to mathematical proof-making, despite the fact that it is typically impossible to verify that real-world data satisfy the axiomatic assumptions upon which the stochastic process model is based! In essence, the assumptions pave the way for constructing mathematical proofs in the theory of stochastic processes rather than, as they should in science, for validating the applicability of theory to real-world applications.

This is an extremely serious misstep for this important field of study, and it parallels a similarly egregious misstep taken early in the 20th Century, when astrophysics and cosmology became dominated by mathematicians who were bent on developing a theory that was particularly mathematically viable rather than most consistent with the Scientific Method. This led to wildly abstract models and associated theory (such as black holes, dark matter, dark energy, and the like) that are dominated by the role of the force of Gravity, whereas Electromagnetism has been scientifically demonstrated to play the true central role in the workings of the Universe. As in the case of the firmly established but mistaken belief that stochastic process models for stationary and cyclostationary time-series are the only viable models, the gravity-centric model of the universe, upon which all mainstream astrophysics is based, is so ingrained in what university students have been taught since early in the 20th century that few professors and mainstream astrophysics practitioners can bring themselves to recognize the alternative electromagnetism-centric model that is strongly argued to be superior in terms of agreeing with empirical data. Interested readers are referred to the major website www.thunderbolts.info, where the page “Beginner’s Guide” is a good place to start.

With this hindsight, this website would be remiss to simply present the subject of cyclostationarity within the framework of stochastic processes, which has unfortunately become the norm. This would be the path of least resistance, considering the impact of over half a century of using and teaching the stochastic process theory as if it were the only viable theory, without even mentioning that an alternative exists and actually preceded the stochastic process concept before it was buried by mathematicians behaving as if the scientific method were irrelevant.

FOREWORD

A good deal of our statistical theory, although it is mathematical in nature, originated not in mathematics but in problems of astronomy, geomagnetism and meteorology: examples of fruitful problems in these subjects have included the clustering of stars, also galaxies, on the celestial sphere, tidal analysis, the correlation of fluctuations of the Earth’s magnetic field with other solar-terrestrial effects, and the determination of seasonal variations and climatic trends from weather data. All three of these fields are observational. Great figures of the past, such as C. F. Gauss (1777–1855) (who worked with both astronomical and geomagnetic data, and discovered the method of least square fitting of data, the normal error distribution, and the Fast Fourier Transform algorithm), have worked on observational data analysis and have contributed much to our body of knowledge on time series and randomness.

Much other theory has come from gambling, gunnery, and agricultural research, fields that are experimental. Measurements of the fall of shot on a firing range will reveal a pattern that can be regarded as a sample from a normal distribution in two dimensions, together with whatever bias is imposed by pointing and aiming, the wind, air temperature, atmospheric pressure and Earth rotation. The deterministic part of any one of these influences may be characterized with further precision by further firing tests. In the experimental sciences, as well as in the observational, great names associated with the foundations of statistics and probability also come to mind.

Experimental subjects are traditionally distinguished from observational ones by the property that conditions are under the control of the experimenter. The design of experiments leads the experimenter to the idea of an ensemble, or random process, an abstract probabilistic creation illustrated by the bottomless barrel of well-mixed marbles that is introduced in elementary probability courses. A characteristic feature of the contents of such a barrel is that we know in advance how many marbles there are of each color, because it is we who put them in; thus, a sample set that is withdrawn after stirring must be compatible with the known mix.

The observational situation is quite unlike this. Our knowledge of what is in the barrel, or of what Nature has in store for us, is to be deduced from what has been observed to come out of the barrel, to date. The probability distribution, rather than being a given, is in fact to be intuited from experience. The vital stage of connecting the world of experience to the different world of conventional probability theory may be glossed over when foreknowledge of the barrel and its contents — a probabilistic model — are posited as a point of departure. Many experimental situations are like this observational one.

The theory of signal processing, as it has developed in electrical and electronics engineering, leans heavily toward the random process, defined in terms of probability distributions applicable to ensembles of sample signal waveforms. But many students who are adept at the useful mathematical techniques of the probabilistic approach and quite at home with joint probability distributions are unable to make even a rough drawing of the underlying sample waveforms. The idea that the sample waveforms are the deterministic quantities being modeled somehow seems to get lost.

When we examine the pattern of fall of shot from a gun, or the pattern of bullet holes in a target made by firing from a rifle clamped in a vise, the distribution can be characterized by its measurable centroid and second moments or other spread parameters. While such a pattern is necessarily discrete, and never much like a normal distribution, we have been taught to picture the pattern as a sample from an infinite ensemble of such patterns; from this point of view the pattern will of course be compatible with the adopted parent population, as with the marbles. In this probabilistic approach, to simplify mathematical discussion, one begins with a model, or specification of the continuous probability distribution from which each sample is supposed to be drawn. Although this probability distribution is not known, one is comforted by the assurance that it is potentially approachable by expenditure of more ammunition. But in fact it is not.

The assumption of randomness is an expression of ignorance. Progress means the identification of systematic effects which, taken as a whole, may initially give the appearance of randomness or unpredictability. Continuing to fire at the target on a rifle range will not refine the probability distribution currently in use but will reveal, to a sufficiently astute planner of experiments, that air temperature, for example, has a determinate effect which was always present but was previously accepted as stochastic. After measurement, to appropriate precision, temperature may be allowed for. Then a new probability model may be constructed to cover the effects that remain unpredictable.

Many authors have been troubled by the standard information theory approach via the random process or probability distribution because it seems to put the cart before the horse. Some sample parameters such as mean amplitudes or powers, mean durations and variances may be known, to precision of measurement, but if we are to go beyond pure mathematical deduction and make advances in the realm of phenomena, theory should start from the data. To do otherwise risks failure to discover that which is not built into the model. Estimating the magnitude of an earthquake from seismograms, assessing a stress-test cardiogram, or the pollutant in a stormwater drain, are typical exercises where noise, systematic or random, is to be fought against. Problems on the forefront of development are often ones where the probability distributions of neither signal nor noise is known; and such distributions may be essentially unknowable because repetition is impossible. Thus, any account of measurement, data processing, and interpretation of data that is restricted to probabilistic models leaves something to be desired.

The techniques used in actual research with real data do not loom large in courses in probability. Professor Gardner’s book demonstrates a consistent approach from data, those things which in fact are given, and shows that analysis need not proceed from assumed probability distributions or random processes. This is a healthy approach and one that can be recommended to any reader.

Ronald N. Bracewell

Stanford, California

PREFACE

This book grew out of an enlightening discovery I made a few years ago, as a result of a long-term attempt to strengthen the tenuous conceptual link between the abstract probabilistic theory of cyclostationary stochastic processes and empirical methods of signal processing that accommodate or exploit periodicity in random data. After a period of unsatisfactory progress toward using the concept of ergodicity¹ to strengthen this link, it occurred to me (perhaps wishfully) that the abstraction of the probabilistic framework of the theory might not be necessary. As a first step in pursuing this idea, I set out to clarify for myself the extent to which the probabilistic framework is needed to explain various well-known concepts and methods in the theory of stationary stochastic processes, especially spectral analysis theory. To my surprise, I discovered that all the concepts and methods of empirical spectral analysis can be explained in a more straightforward fashion in terms of a deterministic theory, that is, a theory based on time-averages of a single time-series rather than ensemble-averages of hypothetical random samples from an abstract probabilistic model. To be more specific, I found that the fundamental concepts and methods of empirical spectral analysis can be explained without use of probability calculus or the concept of probability and that probability calculus, which is indeed useful for quantification of the notion of degree of randomness or variability, can be based on time-averages of a single time-series without any use of the concept or theory of a stochastic process defined on an abstract probability space. This seemed to be of such fundamental importance for practicing engineers and scientists and so intuitively satisfying that I felt it must already be in the literature.
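The idea that empirical spectral analysis can proceed from time averages on a single time-series can be illustrated with a short sketch. It rests on assumptions not in the text: a real discrete-time record, a hypothetical test record (a cosine at 0.125 cycles/sample plus seeded pseudo-random noise, standing in for one observed record), and a triangular (Bartlett) lag window. It computes the time-averaged autocorrelation of that one record and Fourier-transforms its windowed version, which is the correlogram approach associated with Blackman and Tukey; no ensemble of records is involved.

```python
import math
import random

def time_avg_autocorr(x, max_lag):
    """Time-averaged autocorrelation of ONE record: for each lag tau, the mean of
    x[t] * x[t + tau] over every t for which both samples exist."""
    N = len(x)
    return [sum(x[t] * x[t + tau] for t in range(N - tau)) / (N - tau)
            for tau in range(max_lag + 1)]

def correlogram_spectrum(R, freqs):
    """Fourier transform of the triangularly (Bartlett) weighted autocorrelation,
    using R(-tau) = R(tau) for a real record; freqs in cycles/sample."""
    L = len(R)
    S = []
    for f in freqs:
        s = R[0]
        for tau in range(1, L):
            s += 2.0 * (1.0 - tau / L) * R[tau] * math.cos(2 * math.pi * f * tau)
        S.append(s)
    return S

# Hypothetical single record: a tone at f0 plus seeded pseudo-random noise.
random.seed(0)
N, f0 = 4096, 0.125
x = [math.cos(2 * math.pi * f0 * t) + random.gauss(0.0, 0.5) for t in range(N)]

R = time_avg_autocorr(x, max_lag=63)
freqs = [k / 256 for k in range(129)]          # 0 to 0.5 cycles/sample
S = correlogram_spectrum(R, freqs)
peak = freqs[max(range(len(S)), key=S.__getitem__)]
print(f"R[0]={R[0]:.3f}  R[4]={R[4]:.3f}  spectral peak at f={peak}")
```

R[0] should come out near 0.75 (0.5 from the tone plus 0.25 from the noise), R[4] near -0.5, and the spectral peak at 0.125 cycles/sample.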

To put my discovery in perspective, I became a student of the history of the subject. I found that the apparent present-day complacence with the abstraction of the probabilistic theory of stochastic processes, introduced by A. N. Kolmogorov in 1941, has been the trend for about 40 years (as of 1985). Nevertheless, I found also that many probabilists throughout this period, including Kolmogorov himself, have felt that the concept of randomness should be defined as directly as possible, and that from this standpoint it seems artificial to conceive of a time-series as a sample of a stochastic process. (The first notable attempt to set up the probability calculus more directly was the theory of Collectives introduced by Von Mises in 1919; the mathematical development of such alternative approaches is traced by P. R. Masani [Masani 1979].) In the engineering literature, I found that in the early 1960s two writers, D. G. Brennan [Brennan 1961] and E. M. Hofstetter [Hofstetter 1964], had made notable efforts to explain that much of the theory of stationary time-series need not be based on the abstract probabilistic theory of stochastic processes and then linked with empirical method only through the abstract concept of ergodicity, but rather that a probabilistic theory based directly on time-averages will suffice; however, they did not pursue the idea that a theory of empirical spectral analysis can be developed without any use of probability. Similarly, the more recent book by D. R. Brillinger on time-series [Brillinger 1975] briefly explains precisely how the probabilistic theory of stationary time-series can be based on time-averages, but it develops the theory of empirical spectral analysis entirely within the probabilistic framework. Likewise, the early engineering book by R. B. Blackman and J. W. Tukey [Blackman and Tukey 1958] on spectral analysis defines an idealized spectrum in terms of time-averages but then carries out all analysis of measurement techniques within the probabilistic framework of stochastic processes. In the face of this 40-year trend, I was perplexed to find that the one most profound and influential work in the entire history of the subject of empirical spectral analysis, Norbert Wiener’s Generalized Harmonic Analysis, written in 1930 [Wiener 1930], was entirely devoid of probability theory; and yet I found only one book written since then for engineers or scientists that provides more than a brief mention of Wiener’s deterministic theory. All other such books that I found emphasize the probabilistic theory of A. N. Kolmogorov usually to the complete exclusion of Wiener’s deterministic theory. This one book was written by a close friend and colleague of Wiener’s, Y. W. Lee, in 1960 [Lee 1960]. Some explanation of this apparent historical anomaly is given by P. R. Masani in his recent commentary on Wiener’s Generalized Harmonic Analysis [Masani 1979]: “The quick appearance of the Birkhoff ergodic theorem and the Kolmogorov theory of stochastic processes after the publication of Wiener’s Generalized Harmonic Analysis created an intellectual climate favoring stochastic analysis rather than generalized harmonic analysis.” But Masani goes on to explain that the current opinion, that Wiener’s 1930 memoir [Wiener 1930] marks the culmination of generalized harmonic analysis and its supersession by the more advanced theories of stochastic processes, is questionable on several counts, and he states that the “integrity and wisdom” in the attitude expressed in the early 1960s by Kolmogorov suggesting a possible return to the ideas of Von Mises “. . . should point the way toward the future. Side by side with the vigorous pursuit of the theory of stochastic processes, must coexist a more direct process-free [deterministic] inquiry of randomness of different classes of functions.” In an even stronger stance, T. L. Fine in the concluding section of his book Theories of Probability [Fine 1973] states “Judging from the present confused status of probability theory, the time is at hand for those concerned about the characterization of chance and uncertainty and the design of inference and decision-making systems to reconsider their long-standing dependence on the traditional statistical and probabilistic methodology. . . Why not ignore the complicated and hard to justify probability-statistics structure and proceed ‘directly’ to those, perhaps qualitative, assumptions that characterize our source of random phenomena, the means at our disposal, and our task?”

As a result of my discovery and my newly gained historical perspective, I felt compelled to write a book that would have the same goals, in principle, as many existing books on spectral analysis—to present a general theory and methodology for empirical spectral analysis—but that would present a more relevant and palatable (for many applications) deterministic theory following Wiener’s original approach rather than the conventional probabilistic theory. As the book developed, I continued to wonder about the apparent fact that no one in the 50 years (as of 1985) since Wiener’s memoir had considered such a project worthy enough to pursue. However, as I continued to search the literature, I found that one writer, J. Kampé de Fériet, did make some progress along these lines in a tutorial paper [Kampé de Fériet 1954], and other authors have contributed to development of deterministic theories of related subjects in time-series analysis, such as linear prediction and extrapolation [Wold 1948], [Finch 1969], [Fine 1970]. Furthermore, as the book progressed and I observed the favorable reactions of my students and colleagues, my conviction grew to the point that I am now convinced that it is generally beneficial for students of the subject of empirical spectral analysis to study the deterministic theory before studying the more abstract probabilistic theory.

When I had completed most of the development for a book on a deterministic theory of empirical spectral analysis of stationary time-series, I was then able to return to the original project of presenting the results of my research work on cyclostationary time-series but within a nonprobabilistic framework. Once I started, it quickly became apparent that I was able to conceptualize intuitions, hunches, conjectures, and so forth far more clearly than before when I was laboring within the probabilistic framework. The original relatively fragmented research results on cyclostationary stochastic processes rapidly grew into a comprehensive theory of random time-series from periodic phenomena that is every bit as satisfying as the theory of random time-series from constant phenomena (stationary time-series) and is even richer. This theory, which brings to light the fundamental role played by spectral correlation in the study of periodic phenomena, is presented in Part II.

Part I of this book is intended to serve as both a graduate-level textbook and a technical reference. The only prerequisite is an introductory course on Fourier analysis. However, some prior exposure to probability would be helpful for Section B in Chapter 5 and Section A in Chapter 15. The body of the text in Part I presents a thorough development of fundamental concepts and results in the theory of statistical spectral analysis of empirical time-series from constant phenomena, and a brief overview is given at the end of Chapter 1. Various supplements that expand on topics that are in themselves important or at least illustrative but that are not essential to the foundation and framework of the theory, are included in appendices and exercises at the ends of chapters.

Part II of this book, like Part I, is intended to serve as both textbook and reference, and the same unifying philosophical framework developed in Part I is used in Part II. However, unlike Part I, the majority of concepts and results presented in Part II are new. Because of the novelty of this material, a brief preview is given in the Introduction to Part II. The only prerequisite for Part II is Part I.

The focus in this book is on fundamental concepts, analytical techniques, and basic empirical methods. In order to maintain a smooth flow of thought in the development and presentation of concepts that steadily build on one another, various derivations and proofs are omitted from the text proper and are put into the exercises, which include detailed hints and outlines of solution approaches. Depending on students’ background, instructors can either assign these as homework exercises or present them in the lectures. Because the treatment of experimental design and applications is brief and is also relegated to the exercises and concise appendices, some readers might desire supplements on these topics.

===============

1 Ergodicity is the property of a mathematical model for an infinite set of time-series that guarantees that an ensemble average over the infinite set will equal an infinite time average over one member of the set.

REFERENCES

BLACKMAN, R. B. and J. W. TUKEY. 1958. The Measurement of Power Spectra. New York: American Telephone and Telegraph Co.

BRENNAN, D. G. 1961. Probability theory in communication system engineering. Chapter 2 in Communication System Theory. Ed. E. J. Baghdady. New York: McGraw-Hill.

BRILLINGER, D. R. 1975. Time Series. New York: Holt, Rinehart and Winston.

FINCH, P. D. 1969. Linear least squares prediction in non-stochastic time-series. Advances in Applied Prob. 1:111–122.

FINE, T. L. 1970. Extrapolation when very little is known about the source. Information and Control. 16:331–359.

FINE, T. L. 1973. Theories of Probability: An Examination of Foundations. New York: Academic Press.

HOFSTETTER, E. M. 1964. Random processes. Chapter 3 in The Mathematics of Physics and Chemistry, vol. II. Ed. H. Margenau and G. M. Murphy. Princeton, N.J.: D. Van Nostrand Co.

KAMPÉ DE FÉRIET, J. 1954. Introduction to the statistical theory of turbulence. I and II. J. Soc. Indust. Appl. Math. 2, Nos. 1 and 3: 1–9 and 143–174.

LEE, Y. W. 1960. Statistical Theory of Communication. New York: John Wiley & Sons.

MASANI, P. R. 1979. “Commentary on the memoir on generalized harmonic analysis.” pp. 333–379 in Norbert Wiener: Collected Works, Volume II. Cambridge, Mass.: Massachusetts Institute of Technology.

WIENER, N. 1930. Generalized harmonic analysis. Acta Mathematica. 55:117–258.

WOLD, H. O. A. 1948. On prediction in stationary time-series. Annals of Math Stat. 19:558–567.

William A. Gardner

INTRODUCTION

The subject of Part I is the statistical spectral analysis of empirical time-series. The term empirical indicates that the time-series represents data from a physical phenomenon; the term spectral analysis denotes decomposition of the time-series into sine wave components; and the term statistical indicates that the squared magnitude of each measured or computed sine wave component, or the product of pairs of such components, is averaged to reduce random effects in the data that mask the spectral characteristics of the phenomenon under study. The purpose of Part I is to present a comprehensive deterministic theory of statistical spectral analysis and thereby to show that contrary to popular belief, the theoretical foundations of this subject need not be based on probabilistic concepts. The motivation for Part I is that for many applications the conceptual gap between practice and the deterministic theory presented herein is narrower and thus easier to bridge than is the conceptual gap between practice and the more abstract probabilistic theory. Nevertheless, probabilistic concepts are not ignored. A means for obtaining probabilistic interpretations of the deterministic theory is developed in terms of fraction-of-time distributions, and ensemble averages are occasionally discussed.

A few words about the terminology used are in order. Although the terms statistical and probabilistic are used by many as if they were synonymous, their meanings are quite distinct. According to the Oxford English Dictionary, statistical means nothing more than “consisting of or founded on collections of numerical facts”. Therefore, an average of a collection of spectra is a statistical spectrum, and this has nothing to do with probability. Thus, there is nothing contradictory in the notion of a deterministic or non-probabilistic theory of statistical spectral analysis. (An interesting discussion of variations in usage of the term statistical is given in Comparative Statistical Inference by V. Barnett [Barnett 1973].) The term deterministic is used here as it is commonly used, as a synonym for non-probabilistic. Nevertheless, the reader should be forewarned that the elements of the non-probabilistic theory presented herein are defined by infinite limits of time averages and are therefore no more deterministic in practice than are the elements of the probabilistic theory. (In mathematics, the deterministic and probabilistic theories referred to herein are sometimes called the functional and stochastic theories, respectively.) The term random is often taken to imply an underlying probabilistic model. But in this book, the term is used in its broader sense to denote nothing more than the vague notion of erratic unpredictable behavior.

This introductory chapter sets the stage for the in-depth study of spectral analysis taken up in the following chapters by explaining objectives and motives, answering some basic questions about the nature and uses of spectral analysis, and establishing a historical perspective on the subject.

A premise of this book is that the way engineers and scientists are commonly taught to think about empirical statistical spectral analysis of time-series data is fundamentally inappropriate for many applications—maybe even most. The essence of the subject is not really as abstruse as it appears to be from what has become the conventional point of view. The problem is that the subject has been imbedded in the abstract probabilistic framework of stochastic processes, and this abstraction impedes conceptualization of the fundamental principles of empirical statistical spectral analysis. To circumvent this artificial conceptual complication, the probabilistic theory of statistical spectral analysis should be taught to engineers and scientists only after they have learned the fundamental deterministic principles—both qualitative and quantitative. For example, one should first learn 1) when and why sine wave analysis of time-series is appropriate, 2) how and why temporal and spectral resolution interact, 3) why statistical (averaged) spectra are of interest, and 4) what the various methods for measuring and computing statistical spectra are and how they are related. One should also learn 5) how simultaneously to control the spectral and temporal resolution and the degree of randomness (reliability) of a statistical spectrum. All this can be accomplished in a non-superficial way without reference to the probabilistic theory of stochastic processes.

The concept of a deterministic theory of statistical spectral analysis is not new. Much deterministic theory was developed prior to and after the infusion, beginning in the 1930s, of probabilistic concepts into the field of time-series analysis. The most fundamental concept underlying present-day theory of statistical spectral analysis is the concept of an ideal spectrum, and the primary objective of statistical spectral analysis is to estimate the ideal spectrum using a finite amount of data. The first theory to introduce the concept of an ideal spectrum is Norbert Wiener’s theory of generalized harmonic analysis [Wiener 1930], and this theory is deterministic. Later, Joseph Kampé de Fériet presented a deterministic theory of statistical spectral analysis that ties Wiener’s theory more closely to the empirical reality of finite-length time-series [Kampé de Fériet 1954]. But the very great majority of treatments in the ensuing 30 years consider only probabilistic theory of statistical spectral analysis that is based on the use of stochastic process models of time functions, although a few authors do briefly mention the dual deterministic theory (e.g., [Koopmans 1974; Brillinger 1976]).

The primary objective of Part I of this book is to adopt the deterministic viewpoint of Wiener and Kampé de Fériet and show that a comprehensive deterministic theory of statistical spectral analysis, which for many applications relates more directly to empirical reality than does its more popular probabilistic counterpart based on stochastic processes, can be developed. A secondary objective of Part I is to adopt the empirical viewpoint of Donald G. Brennan [Brennan 1961] and Edward M. Hofstetter [Hofstetter 1964], from which they develop an objective probabilistic theory of stationary random processes based on fraction-of-time distributions, and show that probability theory can be applied to the deterministic theory of statistical spectral analysis without introducing the more abstract mathematical model of empirical reality based on the axiomatic or subjective probabilistic theory of stochastic processes. This can be interpreted as an exploitation of Herman O. A. Wold’s isomorphism between an empirical time-series and a probabilistic model of a stationary stochastic process. As explained below in Section B, this isomorphism is constructed by defining the ensemble, upon which the probabilistic theory of time functions is based, to be the set of all time-translated versions of a single function of time—the ensemble generator—and it is responsible for the duality between probabilistic (ensemble-average) and deterministic (time-average) theories of time-series [Wold 1948] [Gardner 1985]. 
Moreover, when it is admitted that it is time averages, not ensemble averages, that are of interest in practice, the excuse generally offered for nevertheless adopting a stochastic process model is to carelessly assume that the stochastic process is ergodic (an even more abstract concept), in which case time averages converge to ensemble averages, a result typically presented to students as magic. What is not generally mentioned (and probably rarely even recognized by instructors) is that assuming ergodicity is tantamount to assuming that the ensemble is (with probability equal to one) simply the collection of all time-translated versions of a single time function. Thus, the whole exercise of abandoning the more straightforward fraction-of-time probabilistic model in favor of the abstract stochastic process model is all for naught. So why drag our students through this silly exercise, which is bound to serve no purpose other than to confuse them, especially given that the truth about all this, presented here, is essentially never revealed to the student?

There are two motives for Part I of this book. The first is to stimulate a reassessment of the way engineers and scientists are today, evidently exclusively, taught to think about statistical spectral analysis by showing that probability theory need not play a primary role. The second motive is to pave the way for introducing a new theory and methodology for statistical spectral analysis of random data from periodically time-variant phenomena, which is presented in Part II. The fact that this new theory and methodology, which unifies various emerging—as well as long-established—time-series analysis concepts and techniques, is most transparent when built on the foundation of the deterministic theory developed in Part I is additional testimony that probability theory need not play a primary role in statistical spectral analysis.

The book, although concise, is tutorial and is intended to be comprehensible by graduate students and professionals in engineering, science, mathematics, and statistics. The accomplishments of the book should be appreciated most by those who have studied statistical spectral analysis in terms of the popular probabilistic theory and have struggled to bridge the conceptual gaps between this abstract theory and empirical reality.

Spectral analysis of functions is used for solving a wide variety of practical problems encountered by engineers and scientists in nearly every field of engineering and science. The functions of primary interest in most fields involving data analysis are temporal or spatial waveforms or discrete sequences of numbers. The most basic purpose of spectral analysis is to represent a function by a sum of weighted sinusoidal functions called spectral components; that is, the purpose is to decompose (analyze) a function into these spectral components. The weighting function in the decomposition is a density of spectral components. This spectral density is also called a spectrum.¹ The reason for representing a function by its spectrum is that the spectrum can be an efficient, convenient, and often revealing description of the function.

As an example of the use of spectral representation of temporal waveforms in the field of signal processing, consider the signal extraction problem of extracting an information-bearing signal from corrupted (noisy) measurements. In many situations, the spectrum of the signal differs substantially from the spectrum of the noise. For example, the noise might have more high-frequency content; hence, the technique of spectral filtering can be used to attenuate the noise while leaving the signal intact. Another example is the data-compression problem of using coding to compress the amount of data used to represent information for the purpose of efficient storage or transmission. In many situations, the information contained in a complex temporal waveform (e.g., a speech segment) can be coded more efficiently in terms of the spectrum.

There are two types of spectral representations. The more elementary of the two shall be referred to as simply the spectrum, and the other shall be referred to as the statistical spectrum. The term statistical indicates that averaging or smoothing is used to reduce random effects in the data that mask the spectral characteristics of the phenomenon under study. For time-functions, the spectrum is obtained from an invertible transformation from a time-domain description of a function, $x(t)$, to a frequency-domain description, or more generally to a joint time- and frequency-domain description. The (complex) spectrum of a segment of data of length $T$ centered at time $t$ and evaluated at frequency $f$ is

$$X_T(t,f) = \int_{t-T/2}^{t+T/2} x(u)\, e^{-i2\pi f u}\, du \qquad (1)$$

for which $-\infty < f < \infty$. Because of the invertibility of this transformation, a function can be recovered from its spectrum,

$$x(u) = \int_{-\infty}^{\infty} X_T(t,f)\, e^{i2\pi f u}\, df, \qquad |u - t| \le T/2. \qquad (2)$$

In contrast to this, a statistical spectrum involves a magnitude-extraction operation that is not invertible followed by an averaging or smoothing operation. For example, the statistical spectrum

$$S_{x_T}(t,f)_{\Delta t} = \frac{1}{\Delta t} \int_{t-\Delta t/2}^{t+\Delta t/2} S_{x_T}(u,f)\, du \qquad (3)$$

is obtained from the normalized squared magnitude spectrum

$$S_{x_T}(t,f) = \frac{1}{T}\, \lvert X_T(t,f) \rvert^2 \qquad (4)$$

followed by a temporal smoothing operation. Thus, a statistical spectrum is a summary description of a function from which the function cannot be recovered. Therefore, although the spectrum is useful for both signal extraction and data compression, the statistical spectrum is not directly useful for either. It is, however, quite useful indirectly for analysis, design, and adaptation of schemes for signal extraction and data compression. It is also useful for forecasting or prediction and more directly for other signal-processing tasks such as 1) the modeling and system-identification problems of determining the characteristics of a system from measurements on it, such as its response to excitation, and 2) decision problems, such as the signal-detection problem of detecting the presence of a signal buried in noise. As a matter of fact, the problem of detecting hidden periodicities in random data motivated the earliest work in the development of spectral analysis, as discussed in Section D below.
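To make (1), (3), and (4) concrete for sampled data, here is a minimal Python sketch. It assumes a sampling interval `dt`, replaces each integral by a Riemann sum, and uses hypothetical helper names (`segment_spectrum`, `periodogram`, `smoothed_spectrum`); it is a direct, deliberately slow illustration, not an efficient FFT-based spectrum analyzer.

```python
import cmath
import math


def segment_spectrum(x, dt, center, half_len, f):
    """Riemann-sum approximation of (1): the complex spectrum X_T(t, f) of the
    2*half_len samples centered at sample index `center` (T = 2*half_len*dt)."""
    total = 0j
    for n in range(center - half_len, center + half_len):
        u = n * dt  # time of sample n
        total += x[n] * cmath.exp(-2j * math.pi * f * u) * dt
    return total


def periodogram(x, dt, center, half_len, f):
    """Normalized squared magnitude spectrum (4): |X_T(t, f)|^2 / T."""
    T = 2 * half_len * dt
    return abs(segment_spectrum(x, dt, center, half_len, f)) ** 2 / T


def smoothed_spectrum(x, dt, center, half_len, smooth_half, f):
    """Temporal smoothing as in (3): average the periodogram over segment
    centers within +/- smooth_half samples of `center`."""
    centers = range(center - smooth_half, center + smooth_half + 1)
    return sum(periodogram(x, dt, c, half_len, f) for c in centers) / len(centers)


# Example data: unit-amplitude cosine at 5 Hz, sampled every 0.01 s
dt = 0.01
x = [math.cos(2 * math.pi * 5.0 * n * dt) for n in range(1000)]
s_at_5 = smoothed_spectrum(x, dt, 500, 100, 50, 5.0)    # T = 2 s
s_at_7p5 = smoothed_spectrum(x, dt, 500, 100, 50, 7.5)
```

With T = 2 s and smoothing over ±50 samples, `s_at_5` comes out at 0.5 (that is, T/4) and `s_at_7p5` is essentially zero, because every window holds a whole number of cycles of the relevant offset exponentials. For other parameters the Riemann sums only approximate the integrals.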

Statistical spectral analysis has diverse applications in areas such as mechanical vibrations, acoustics, speech, communications, radar, sonar, ultrasonics, optics, astronomy, meteorology, oceanography, geophysics, economics, biomedicine, and many other areas. To be more specific, let us briefly consider a few applications. Spectral analysis is used to characterize various signal sources. For example, the spectral purity of a sine wave source (oscillator) is determined by measuring the amounts of harmonics from distortion due, for example, to nonlinear effects in the oscillator and also by measuring the spectral content close in to the fundamental frequency of the oscillator, which is due to random phase noise. Also, the study of modulation and coding of sine wave carrier signals and pulse-train signals for communications, telemetry, radar, and sonar employs spectral analysis as a fundamental tool, as do surveillance systems that must detect and identify modulated and coded signals in a noisy environment. Spectral analysis of the response of electrical networks and components such as amplifiers to both sine wave and random-noise excitation is used to measure various properties such as nonlinear distortion, rejection of unwanted components, such as power-supply components and common-mode components at the inputs of differential amplifiers, and the characteristics of filters, such as center frequencies, bandwidths, pass-band ripple, and stop-band rejection. Similarly, spectral analysis is used to study the magnitude and phase characteristics of the transfer functions as well as nonlinear distortion of various electrical, mechanical, and other systems, including loudspeakers, communication channels and modems (modulator-demodulators), and magnetic tape recorders in which variations in tape motion introduce signal distortions. 
In the monitoring and diagnosis of rotating machinery, spectral analysis is used to characterize random vibration patterns that result from wear and damage that cause imbalances. Also, structural analysis of physical systems such as aircraft and other vehicles employs spectral analysis of vibrational response to random excitation to identify natural modes of vibration (resonances). In the study of natural phenomena such as weather and the behavior of wildlife and fisheries populations, the problem of identifying cause-effect relationships is attacked using techniques of spectral analysis. Various physical theories are developed with the assistance of spectral analysis, for example, in studies of atmospheric turbulence and undersea acoustical propagation. In various fields of endeavor involving large, complex systems such as economics, spectral analysis is used in fitting models to time-series for several purposes, such as simulation and forecasting. As might be surmised from this sampling of applications, the techniques of spectral analysis permeate nearly every field of science and of engineering.

Spectral analysis applies to both continuous-time functions, called waveforms, and discrete-time functions, called sampled data. Other terms are commonly used also; for example, the terms data and time-series are each used for both continuous-time and discrete-time functions. Because the great majority of data sources are continuous-time phenomena, and because an important objective is to maintain a close tie between theory and empirical reality, this book focuses on continuous-time data. Furthermore, since optical technology has emerged as a new frontier in signal processing and optical quantities vary continuously in time and space, this focus on continuous-time data is well suited to upcoming technological developments. Nevertheless, since some of the most economical implementations of spectrum analyzers and many of the newly emerging parametric methods of spectral analysis operate with discrete time and discrete frequency, and since some data are available only in discrete form, discrete-time and discrete-frequency methods also are described.

===============

1 The term spectrum, which derives from the Latin for image, was originally introduced by Sir Isaac Newton (see [Robinson 1982]).

The primary reason why sinewaves are especially appropriate components with which to analyze waveforms is our preoccupation with convolutions of time series with the kernels (impulse-response functions) of linear time-invariant (LTI) transformations, which we often call filters. A secondary reason why statistical (time-averaged) analysis into sinewave components is especially appropriate is our preoccupation with time-invariant phenomena (data sources). To be specific, a transformation of a waveform $x(t)$ into another waveform, say $y(t)$, is an LTI transformation if and only if there exists a weighting function $h(t)$ (here assumed to be absolutely integrable in the generalized sense, which accommodates Dirac deltas) such that $y(t)$ is the convolution (denoted by $\otimes$) of $x(t)$ with $h(t)$:

$$y(t) = h(t) \otimes x(t) \triangleq \int_{-\infty}^{\infty} h(t-u)\, x(u)\, du = \int_{-\infty}^{\infty} h(u)\, x(t-u)\, du. \qquad (5)$$

The time-invariance property of a transformation is, more precisely, a translation-invariance property that guarantees that a translation, by $w$, of $x(t)$ to $x(t+w)$ has no effect on $y(t)$ other than a corresponding translation to $y(t+w)$ (exercise 1). A phenomenon is said to be time-invariant only if it is persistent in the sense that it is appropriate to conceive of a mathematical model of $x(t)$ for which the following limit time-average exists for each value of $\tau$ and is not identically zero,³

$$R_x(\tau) \triangleq \lim_{Z\to\infty} \frac{1}{Z} \int_{-Z/2}^{Z/2} x(t+\tau/2)\, x(t-\tau/2)\, dt. \qquad (6)$$

This function is called the limit autocorrelation function⁴ for $x(t)$. For $\tau = 0$, (6) is simply the time-averaged value of the instantaneous power.⁵
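For sampled data, (5) and (6) have simple finite analogues. The sketch below is a hypothetical illustration (the names `convolve` and `time_avg_autocorr` are not from the text): it implements discrete convolution, checks the translation property numerically, and approximates the limit autocorrelation by a finite time average. It assumes the one-sided product x[n + lag] x[n], which agrees with the symmetric form of (6) in the limit of long averaging.

```python
import math


def convolve(h, x):
    """Discrete analogue of (5): y[n] = sum_k h[k] * x[n - k] (full-length output)."""
    y = [0.0] * (len(h) + len(x) - 1)
    for k, hk in enumerate(h):
        for m, xm in enumerate(x):
            y[k + m] += hk * xm
    return y


def time_avg_autocorr(x, lag):
    """Finite-time stand-in for the limit autocorrelation (6) at a
    nonnegative integer lag: the average of x[n + lag] * x[n]."""
    count = len(x) - lag
    return sum(x[n + lag] * x[n] for n in range(count)) / count


h = [0.5, 0.3, -0.2]                      # hypothetical impulse response
x = [1.0, -2.0, 0.5, 3.0, 0.0, -1.0]      # arbitrary input
y = convolve(h, x)
y_shifted_input = convolve(h, [0.0, 0.0] + x)   # input delayed by 2 samples

# Unit-amplitude cosine with a period of 8 samples
c = [math.cos(2 * math.pi * n / 8) for n in range(800)]
power = time_avg_autocorr(c, 0)        # time-averaged instantaneous power (lag 0)
half_period = time_avg_autocorr(c, 4)  # lag of half a period
```

Delaying the input by two samples simply delays the output by two samples. For the cosine, the lag-0 average is 1/2 (its average power) and the half-period lag gives -1/2.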

Sinewave analysis is especially appropriate for studying a convolution because the principal components (eigenfunctions) of the convolution operator are the complex sinewave functions $e^{i2\pi f t}$, for all real values of $f$. This follows from the facts that (1) the convolution operation produces a continuous linear combination of time-translates, that is, $y(t)$ is a weighted sum (over $u$) of $x(t-u)$, and (2) the complex sinewave is the only bounded function whose form is invariant (except for a scale factor) to time-translation, that is, a bounded function $x(t)$ satisfies

$$x(t-u) = c(u)\, x(t) \qquad (7)$$

for all $t$ and $u$ if and only if

$$x(t) = X e^{i2\pi f t} \qquad (8)$$

for some complex $X$ and real $f$ (exercise 3). As a consequence, the form of a bounded function $x(t)$ is invariant to all convolutions if and only if $x(t) = X e^{i2\pi f t}$, in which case (5) yields

$$y(t) = H(f)\, x(t) \qquad (9)$$

for which

$$H(f) \triangleq \int_{-\infty}^{\infty} h(t)\, e^{-i2\pi f t}\, dt. \qquad (10)$$
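The eigenfunction property expressed by (7) to (10) can be checked numerically in discrete time. The following sketch assumes a hypothetical four-tap impulse response `h` and a normalized frequency `f` in cycles per sample; it evaluates the discrete convolution at several sample indices for a complex sinewave input and compares the result with H(f) times the input, where `freq_response` is a discrete-time analogue of (10).

```python
import cmath
import math


def freq_response(h, f):
    """Discrete-time analogue of (10): H(f) = sum_k h[k] exp(-i 2 pi f k)."""
    return sum(hk * cmath.exp(-2j * math.pi * f * k) for k, hk in enumerate(h))


def filter_at(h, x_of, n):
    """Discrete-time analogue of (5) at sample n: y[n] = sum_k h[k] x[n - k],
    for an input given as a function of the (unbounded) sample index."""
    return sum(hk * x_of(n - k) for k, hk in enumerate(h))


f = 0.07                         # normalized frequency, cycles per sample (arbitrary)
h = [0.5, -0.2, 0.1, 0.3]        # hypothetical FIR impulse response


def sinewave(n):
    """Complex sinewave (8) with X = 1."""
    return cmath.exp(2j * math.pi * f * n)


H_f = freq_response(h, f)
# (9): the output equals H(f) times the input at every sample index
errors = [abs(filter_at(h, sinewave, n) - H_f * sinewave(n)) for n in range(-5, 6)]
```

A real cosine is, in general, not an eigenfunction in this sense: it is the sum of complex sinewaves at f and -f, which are scaled by the generally different eigenvalues H(f) and H(-f).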

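This fact can be exploited in the study of convolution by decomposing a waveform into a continuous linear combination of sinewaves,⁶

$$x(t) = \int_{-\infty}^{\infty} X(f)\, e^{i2\pi f t}\, df \qquad (11)$$

with weighting function

$$X(f) = \int_{-\infty}^{\infty} x(t)\, e^{-i2\pi f t}\, dt, \qquad (12)$$

because then substitution of (11) into (5) yields

$$y(t) = \int_{-\infty}^{\infty} Y(f)\, e^{i2\pi f t}\, df \qquad (13)$$

for which

$$Y(f) = H(f)\, X(f). \qquad (14)$$

Thus, any particular sinewave component in $y(t)$, say

$$y_f(t) \triangleq Y(f)\, e^{i2\pi f t}, \qquad (15)$$

can be determined solely from the corresponding sinewave component $x_f(t) \triangleq X(f)\, e^{i2\pi f t}$ in $x(t)$, since (14) and (15) yield

$$y_f(t) = H(f)\, x_f(t). \qquad (16)$$

The scale factor $H(f)$ is the eigenvalue associated with the eigenfunction $e^{i2\pi f t}$ of the convolution operator. Transformations (12) and (11) are the Fourier transform and its inverse, respectively, abbreviated by

$$X(f) = \mathcal{F}\{x(t)\}, \qquad x(t) = \mathcal{F}^{-1}\{X(f)\}.$$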
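A discrete-frequency counterpart of (11) to (16) is the circular convolution theorem for the N-point discrete Fourier transform (DFT). The sketch below is a substitute illustration under that assumption, not a reproduction of the continuous-time result: it computes the DFT directly from its definition, forms a circular convolution with a hypothetical short filter, and checks that each DFT component of the output equals the filter's DFT times the corresponding component of the input, the discrete analogue of (14).

```python
import cmath
import math


def dft(x):
    """N-point DFT computed directly from its definition (O(N^2))."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N))
            for k in range(N)]


def circular_convolve(h, x):
    """Circular convolution y[n] = sum_m h[m] x[(n - m) mod N]; h and x have length N."""
    N = len(x)
    return [sum(h[m] * x[(n - m) % N] for m in range(N)) for n in range(N)]


x = [1.0, 2.0, 0.0, -1.0, 3.0, 0.5, -2.0, 1.0]      # arbitrary input
h = [0.5, 0.25, -0.125, 0.0, 0.0, 0.0, 0.0, 0.0]    # hypothetical filter, zero-padded to N
Y = dft(circular_convolve(h, x))
HX = [Hk * Xk for Hk, Xk in zip(dft(h), dft(x))]     # discrete analogue of (14)
```

The circular (rather than linear) convolution is what makes the DFT identity exact; for linear convolution the sequences would first need zero-padding to at least len(h) + len(x) - 1 samples.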

Statistical (time-averaged) analysis of waveforms into sinewave components is especially appropriate for time-invariant phenomena because an ideal statistical spectrum, in which all random effects have been averaged out, exists if and only if the limit autocorrelation (6) exists. Specifically, it is shown in Chapter 3 that the ideal statistical spectrum obtained from (3) by smoothing over all time,

$$\lim_{\Delta t\to\infty} S_{x_T}(t,f)_{\Delta t},$$

exists if and only if the limit autocorrelation exists. Moreover, this ideal statistical spectrum can be characterized in terms of the Fourier transform of $R_x(\tau)$, denoted by

$$S_x(f) \triangleq \int_{-\infty}^{\infty} R_x(\tau)\, e^{-i2\pi f \tau}\, d\tau. \qquad (17)$$

Specifically,

$$\lim_{\Delta t\to\infty} S_{x_T}(t,f)_{\Delta t} = S_x(f) \otimes z_{1/T}(f), \qquad (18)$$

for which $z_{1/T}(f)$ is the unit-area sinc-squared function with width parameter $1/T$,

$$z_{1/T}(f) \triangleq T \left[ \frac{\sin(\pi f T)}{\pi f T} \right]^2. \qquad (19)$$

As the time-interval of spectral analysis is made large, we obtain (in the limit)

$$\lim_{T\to\infty}\, \lim_{\Delta t\to\infty} S_{x_T}(t,f)_{\Delta t} = S_x(f) \qquad (20)$$

because the limit of $z_{1/T}(f)$ is the Dirac delta

$$\lim_{T\to\infty} z_{1/T}(f) = \delta(f), \qquad (21)$$

and convolution of a function with the Dirac delta as in (18) leaves the function unaltered (exercise 2). The ideal statistical spectrum defined by (20) is called the limit spectrum. It is worth emphasizing here that it is conceptually misleading to define the limit spectrum (also called the power spectral density) in terms of the limit autocorrelation using (17), as is unfortunately done in many textbooks. The meaning of the limit spectrum comes from (20), which is its appropriate definition. Equation (17) is simply a characterization of the limit spectrum.
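The role of the sinc-squared kernel in (18) to (21) can be checked numerically. The sketch assumes that (19) has the form T[sin(πfT)/(πfT)]² and uses a simple midpoint rule; the function names are hypothetical. It shows that the kernel has unit area (up to the truncated tails), peak height T, and a main lobe of width 2/T that holds about 90% of the area for every T, so that as T grows the kernel concentrates at f = 0 like the Dirac delta in (21).

```python
import math


def z_kernel(f, T):
    """Unit-area sinc-squared kernel, assumed form of (19): T * [sin(pi f T)/(pi f T)]^2."""
    a = math.pi * f * T
    return T if a == 0.0 else T * (math.sin(a) / a) ** 2


def integrate(func, lo, hi, steps):
    """Midpoint-rule approximation of the integral of func over [lo, hi]."""
    d = (hi - lo) / steps
    return sum(func(lo + (k + 0.5) * d) for k in range(steps)) * d


results = {}
for T in (1.0, 10.0):
    kernel = lambda f, T=T: z_kernel(f, T)
    total = integrate(kernel, -200.0 / T, 200.0 / T, 200_000)   # nearly all of the area
    main_lobe = integrate(kernel, -1.0 / T, 1.0 / T, 20_000)    # |f| < 1/T only
    results[T] = (total, main_lobe, z_kernel(0.0, T))
```

The truncated integral falls short of 1 by roughly 1/(π²·200), because the sinc-squared tails decay only like 1/f²; the main-lobe fraction, about 0.903, is the same for both values of T.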

Before leaving this topic of justifying the focus on sinewave components for time-series analysis, it is instructive (especially for the reader with a background in stochastic processes) to consider how the justification must be modified if we are interested in probabilistic (ensemble-averaged) statistical spectra rather than deterministic (time-averaged) statistical spectra. Let us therefore consider an ensemble of random samples of waveforms $\{x(t,s)\}$, indexed by $s$; for convenience in the ensuing heuristic argument, let us assume that the ensemble is a continuous ordered set for which the ensemble index, $s$, can be any real number. For each member $x(t,s)$ of the ensemble, we can obtain an analysis into principal components (sinewave components). A characteristic property of a set of principal components is that they are mutually uncorrelated⁷ in the sense that

$$\lim_{Z\to\infty} \frac{1}{Z} \int_{-Z/2}^{Z/2} x_f(t,s)\, x_\nu^*(t,s)\, dt = 0, \qquad f \neq \nu, \qquad (22)$$

where * denotes complex conjugation (exercise 5). But in the probabilistic theory, it is required that the principal components be uncorrelated over the ensemble,⁸

$$\lim_{S\to\infty} \frac{1}{S} \int_{-S/2}^{S/2} x_f(t,s)\, x_\nu^*(t,s)\, ds = 0, \qquad f \neq \nu, \qquad (23)$$

as well as uncorrelated over time in order to obtain the desired simplicity in the study of time-series subjected to LTI transformations. If we proceed formally by substitution of the principal component,

$$x_f(t,s) = X(f,s)\, e^{i2\pi f t} = \left[ \int_{-\infty}^{\infty} x(u,s)\, e^{-i2\pi f u}\, du \right] e^{i2\pi f t}, \qquad (24)$$

into (23), we obtain⁹ (after reversing the order of the limit operation and the two integration operations)

$$e^{i2\pi (f-\nu) t} \int_{-\infty}^{\infty}\!\int_{-\infty}^{\infty} \mathcal{R}_x(u_1,u_2)\, e^{-i2\pi (f u_1 - \nu u_2)}\, du_1\, du_2 = 0, \qquad f \neq \nu, \qquad (25)$$

for which the function $\mathcal{R}_x$ is the probabilistic autocorrelation defined by

$$\mathcal{R}_x(t_1,t_2) \triangleq \lim_{S\to\infty} \frac{1}{S} \int_{-S/2}^{S/2} x(t_1,s)\, x^*(t_2,s)\, ds. \qquad (26)$$
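The time-average orthogonality (22) of distinct sinewave components can be illustrated with a finite averaging interval Z. The sketch below uses a hypothetical helper name and a midpoint rule in place of the integral. It computes the finite-Z average of the product of one unit complex sinewave with the conjugate of another; for f ≠ ν its magnitude is |sin(π(f − ν)Z)/(π(f − ν)Z)|, which decays like 1/Z, whereas for f = ν it equals 1.

```python
import cmath
import math


def finite_time_avg(f, nu, Z, steps=100_000):
    """Midpoint-rule approximation of (1/Z) * integral over [-Z/2, Z/2] of
    exp(i 2 pi f t) * conj(exp(i 2 pi nu t)) dt, a finite-Z version of (22)."""
    dt = Z / steps
    total = 0j
    for k in range(steps):
        t = -Z / 2 + (k + 0.5) * dt
        total += cmath.exp(2j * math.pi * f * t) * cmath.exp(2j * math.pi * nu * t).conjugate()
    return total * dt / Z


same = finite_time_avg(1.0, 1.0, 1000.0)        # f == nu
different = finite_time_avg(1.0, 0.7, 1000.0)   # f != nu, long interval
short = finite_time_avg(1.0, 0.7, 5.0 / 3.0)    # f != nu, half a cycle of f - nu
```

With Z = 1000 the cross average for f = 1 and ν = 0.7 is essentially zero (300 whole cycles of the difference frequency), while with Z = 5/3 it is still about 2/π ≈ 0.64; only the limit Z → ∞ in (22) makes it vanish for every pair f ≠ ν.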

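It can be shown (exercise 6) that (23) is valid if and only if

$$\mathcal{R}_x(t_1 + w,\, t_2 + w) = \mathcal{R}_x(t_1,\, t_2) \qquad (27)$$

for all translations $w$, in which case $\mathcal{R}_x(t_1,t_2)$ depends on only the difference of its two arguments,

$$\mathcal{R}_x(t_1,\, t_2) = \mathcal{R}_x(t_1 - t_2). \qquad (28)$$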
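Translation invariance in the sense of (27) and (28) can be illustrated with a classic example that is an assumption here rather than taken from the text: an ensemble of unit-amplitude cosines whose phases are spread uniformly over a full cycle. The sketch uses a finite ensemble of K equally spaced phases as a stand-in for the continuous index s (the constants `F0` and `K` are hypothetical). For K ≥ 3 the ensemble average of x(t₁,s)x(t₂,s) is exactly ½cos(2πF0(t₁ − t₂)), which depends only on t₁ − t₂.

```python
import math

F0 = 0.25   # hypothetical cosine frequency
K = 16      # hypothetical finite ensemble size standing in for the continuum of s


def member(t, s):
    """Ensemble member x(t, s): unit-amplitude cosine with phase 2*pi*s/K."""
    return math.cos(2 * math.pi * F0 * t + 2 * math.pi * s / K)


def ensemble_autocorr(t1, t2):
    """Finite-ensemble stand-in for (26): average of x(t1, s) x(t2, s) over s."""
    return sum(member(t1, s) * member(t2, s) for s in range(K)) / K


pairs = [(0.0, 0.0), (1.3, 0.2), (-2.0, 3.5), (7.25, 7.0)]
shifts = [0.0, 0.4, -5.0, 12.1]
```

The design choice of equally spaced phases makes the double-frequency term cos(2πF0(t₁ + t₂) + 2θ) average to exactly zero, so the check is exact rather than statistical; a single fixed-phase member on its own would not have this property.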

Consequently, principal-component methods of study of an LTI transformation of an ensemble of waveforms are applicable if and only if the correlation of the ensemble is translation invariant. Such an ensemble of random samples of waveforms is commonly said to have arisen from a wide-sense stationary stochastic process.¹⁰ But we must ask if ensembles with translation-invariant correlations are of interest in practice. As a matter of fact, they are, for precisely the same reason that translation-invariant linear transformations are of practical interest. The reason is a preoccupation with time-invariance. That is, the ensemble of waveforms generated by some phenomenon will exhibit a translation-invariant correlation if and only if the data-generating mechanism of the phenomenon exhibits appropriate time-invariance. Such time-invariance typically results from a stable system being in a steady-state mode of operation: a statistical equilibrium. The ultimate in time-invariance of a data-generating mechanism is characterized by a translation-invariant ensemble, which is an ensemble for which the identity

$$x(t+w,\, s) = x(t,\, s'), \qquad (29)$$

in which the index $s'$ depends on $s$ and $w$, holds for all $s$ and all real $w$; that is, each translation by, for instance, $w$ of each ensemble member, such as $x(t,s)$, yields another ensemble member, for example, $x(t,s')$. This time-invariance property (29) is more than sufficient for the desired time-invariance property (27). An ensemble that exhibits property (29) shall be said to have arisen from a strict-sense stationary stochastic process. For many applications, a natural way in which a translation-invariant ensemble would arise as a mathematical model is if the ensemble actually generated by the physical phenomenon is artificially supplemented with all translated versions of the members of the actual ensemble. In many situations, the most intuitively pleasing actual ensemble consists of one and only one waveform, $x(t)$, which shall be called the ensemble generator. In this case, the supplemented ensemble is defined by

$$x(t,s) \triangleq x(t+s), \qquad -\infty < s < \infty. \qquad (30)$$

The way in which a probabilistic model can, in principle, be derived from this ensemble is explained in Chapter 5, Section B. This most intuitively pleasing translation-invariant ensemble shall be said to have arisen from an ergodic¹¹ stationary stochastic process. Ergodicity is the property that guarantees equality between time-averages, such as (22), and ensemble-averages, such as (23). The ergodic relation (30) is known as Herman O. A. Wold’s isomorphism between an individual time-series and a stationary stochastic process [Wold 1948].
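For a discrete-time, N-periodic ensemble generator (an assumption made here so that both averages are exact finite sums), the construction (30) can be checked directly. The hypothetical helpers below build the ensemble of all circular translates of one sequence and confirm that the ensemble average of x(t₁,s)x(t₂,s) over s equals the time average of x(t + τ)x(t) with τ = t₁ − t₂, which is the equality between time averages and ensemble averages that the text attributes to ergodicity.

```python
def translate_ensemble(x):
    """Discrete, N-periodic version of (30): member s is the translate
    x(t, s) = x[(t + s) mod N] of the ensemble generator x."""
    N = len(x)
    return lambda t, s: x[(t + s) % N]


def ensemble_average(member, t1, t2, N):
    """Average of x(t1, s) * x(t2, s) over the N ensemble members s = 0..N-1."""
    return sum(member(t1, s) * member(t2, s) for s in range(N)) / N


def time_average(x, lag):
    """Average of x[t + lag] * x[t] over one period; for an N-periodic sequence
    this equals the infinite time average in (6)."""
    N = len(x)
    return sum(x[(t + lag) % N] * x[t] for t in range(N)) / N


generator = [3.0, -1.0, 2.0, 0.5, -2.5, 1.0, 0.0]   # arbitrary one-period generator
ensemble = translate_ensemble(generator)
N = len(generator)
checks = [(ensemble_average(ensemble, t1, t2, N), time_average(generator, t1 - t2))
          for t1 in range(-3, 4) for t2 in range(-3, 4)]
```

The equality holds because, as s runs over one period, the index (t₂ + s) mod N also runs over one full period, so the ensemble sum is just a relabeled time sum.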

In summary, statistical sinewave analysis—spectral analysis as we shall call it—is especially appropriate in principle if we are interested in studying linear time-invariant transformations of data and data from time-invariant phenomena. Nevertheless, in practice, statistical spectral analysis can be used to advantage for slowly time-variant linear transformations and for data from slowly time-variant phenomena (as explained in Chapter 8) and in other special cases, such as periodic time-variation (as explained in Part II) and the study of the departure of transformations from linearity (as explained in Chapter 7).

Fundamental Empirically Intuitive vs. Superficial Expedient Explanations of PSD for Stationary Ergodic Processes

To explicitly elucidate the difference in character between the treatment from the 1987 book [Bk2] given here, which is fundamental, intuitive, and based on empirical concepts, and the treatment given in the great majority of other textbooks on this subject, which is typically superficial or abstract and mathematical, the following brief concluding remark has been added (in 2020) to this Section 2.3, which otherwise is taken almost word-for-word from [Bk2].

Essentially all treatments of the concepts of what are here (following Section B.2 of Chapter 1 of [Bk2]) called the limit spectrum, stationarity, and ergodicity, throughout the vast signal processing, engineering, statistics, and mathematics literature as well as a great deal of the physics literature, simply *define* the limit spectrum (typically called the power spectral density, or PSD) using (17) and (6), or much more frequently using the probabilistic counterpart of (6), which is (26), typically represented by the abstract expectation operation $E\{\cdot\}$. In contrast, the treatment here provides the fundamental definition (20) in terms of quantities with the concrete empirical meaning given in the presentation preceding (20). This treatment also explains that PSD is an abbreviation for the explicit terminology spectral density of time-averaged instantaneous power (or, for the probabilistic counterpart, spectral density of expected instantaneous power). Similarly, typical treatments in the literature provide no fundamental empirically based conceptual origin of the stationarity and ergodicity properties such as that given here; rather, these properties are usually just posited as mathematical assumptions, such as (28) and equality between (6) and (28) with $\tau = t_1 - t_2$.

Those university professors who have accepted the crucial responsibility of helping the World’s future graduate-level teachers and academic & industrial researchers to grasp the fundamental concepts permeating science and engineering, such as those associated with the power spectral density function addressed here in Section B.2, and yet make the common choice of textbooks that present the expedient but superficial abstract versions of the concepts identified here instead of the concrete empirically based versions of these concepts, are shirking their responsibility. It is depressing to see how widespread such irresponsible behavior is among those entrusted with the education of our future generations of engineers and scientists.

Another Perspective

Basic science is built upon the analysis of data derived from observation, experimentation, and measurement (see Foreword). In the various fields of science, this data analysis often takes the form of spectral analysis for a variety of physical reasons. The following brief review of spectral terminology used throughout the sciences reveals how ubiquitous spectral analysis is in the sciences.

There are about 10 variations on the base word *spectrum*, all relating to the same concept described above in this Section B: namely, the set of strengths of the sinewave components into which a function of time can be decomposed via the procedure of spectral analysis. Here are the traditional definitions of these various terms.

In the 17th century, the word spectrum was introduced into optics by Isaac Newton, referring to the range of colors observed when white light is dispersed through a prism. Before long, the term was adopted to refer to a plot of light intensity or power as a function of frequency or wavelength, also known as a spectral density plot.

The uses of the term spectrum expanded to apply to other waves, such as sound waves that could also be measured as a function of frequency, and the additional terms frequency spectrum and power spectrum of a signal were adopted. The spectrum concept now applies to any signal that can be measured or decomposed along a continuous variable such as energy in electron spectroscopy or mass-to-charge ratio in mass spectrometry.

The absorption spectrum of a chemical element or chemical compound is the spectrum of frequencies or wavelengths of incident radiation that are absorbed by the substance due to electron transitions from a lower to a higher energy state. The emission spectrum refers to the spectrum of radiation emitted by the substance due to electron transitions from a higher to a lower energy state. (The energy of each photon of the radiation is proportional to the sinewave frequency of the radiation; the proportionality factor is Planck's constant.)

In astronomical spectroscopy, the strength, shape, and position of absorption and emission lines, as well as the overall spectral energy distribution of the continuum, reveal many properties of astronomical objects. Stellar classification is the categorization of stars based on their characteristic electromagnetic spectra. The spectral flux density is used to represent the spectrum of a light-source, such as a star.

In physics, the energy spectrum (not to be confused with energy spectral density) of a particle beam is the number of particles, or the beam intensity, as a function of particle energy. Examples of techniques that produce an energy spectrum are alpha-particle spectroscopy, electron energy loss spectroscopy, and mass-analyzed ion-kinetic-energy spectrometry.

In mathematics, the spectrum of a (square) matrix is the finite set of eigenvalues of the matrix. (A matrix represents a linear transformation of one vector, a finite ordered set of numerical values, into another vector.) In functional analysis, the spectrum of a (bounded) operator is the set of numbers $\lambda$ for which the operator minus $\lambda$ times the identity has no bounded inverse; it contains the eigenvalues of the operator, but it can also include a continuum of other values. (An operator is a linear transformation of one function-space vector, an ordered continuum of numerical values called a real-valued function of a real variable, into another function-space vector.) The eigenfunctions of a linear time-invariant operator (a convolution) are the complex sinusoidal functions $e^{i2\pi ft}$, one for every frequency $f$ in the entire set of real numbers. Therefore, the eigenvalues determine the amount by which the spectral components of a function are scaled when the function is transformed by a convolution; hence the use of the term spectrum for the set of eigenvalues. The spectrum of the convolution (the Fourier transform of its kernel) multiplies the spectrum of the function being convolved to produce the spectrum of the resultant convolved function.
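The eigenvalue statement in the preceding paragraph can be checked with a minimal discrete-time sketch that uses only the Python standard library. A complex sinusoid is passed through a convolution, and every output sample comes out as the input sample times one complex number, $H(f)=\sum_k h[k]e^{-i2\pi fk}$. Several choices here are illustrative assumptions: the filter taps, the frequency, and the use of circular convolution over $N$ samples. Circular convolution is used so that a finite-length check is exact.

```python
import cmath
import math

def circular_convolve(h, x):
    """Circular convolution y[n] = sum_k h[k] * x[(n - k) mod N]."""
    n_len = len(x)
    return [sum(h[k] * x[(n - k) % n_len] for k in range(len(h))) for n in range(n_len)]

def transfer_function(h, f):
    """Eigenvalue H(f) = sum_k h[k] exp(-i 2 pi f k), f in cycles per sample."""
    return sum(hk * cmath.exp(-2j * math.pi * f * k) for k, hk in enumerate(h))

N = 64
h = [0.5, 0.3, 0.2]   # hypothetical impulse response (arbitrary illustrative taps)
f = 5 / N             # integer number of cycles in N samples, so circular wrap-around is seamless
x = [cmath.exp(2j * math.pi * f * n) for n in range(N)]  # complex sinusoid at frequency f
y = circular_convolve(h, x)
H = transfer_function(h, f)

# Each output sample equals the input sample scaled by the same complex number H(f):
# the sinusoid is an eigenfunction and H(f) is its eigenvalue.
max_err = max(abs(y[n] - H * x[n]) for n in range(N))
print(abs(H), max_err)
```

A real cosine is generally not an eigenfunction in this sense, because a convolution changes its phase as well as its amplitude. That is why the complex form is used.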

A spectrogram, especially in acoustics, is a visual representation of the frequency spectrum of, for example, sound as a function of time or another variable; it is produced by an apparatus referred to as a spectrograph or spectrometer.

A spectrometer is a device used to record spectra and spectroscopy is the use of a spectrometer for chemical analysis.

===============

2 Readers in need of a brief remedial review of the prerequisite topic of linear time-invariant transformations and the Fourier transform should consult Appendix I at the end of Chapter 1.

3 In Part II, it is explained that periodic and almost periodic phenomena, as well as constant (time-invariant) phenomena, satisfy (6). For $x(t)$ to be from a constant phenomenon, it must satisfy not only (6) but also

for all

.

4 In some treatments of time-series analysis (see [Jenkins and Watts 1968]), the function (6) modified by subtraction of the mean

from $x(t)$, is called the autocovariance function, and when normalized by

it is called the autocorrelation function.

5 If $x(t)$ is the voltage (in volts) across a one-ohm resistance, then $x^2(t)$ is the power dissipation (in watts).

6 If $x(t)$ is absolutely integrable, then (11) and (12) are the usual Fourier transform pair, but if $x(t)$ is a persistent waveform (which does not die out as $|t| \to \infty$) from a time-invariant phenomenon, then (11) and (12) must be replaced with the generalized (integrated) Fourier transform [Wiener 1930], in which case (14) becomes the Stieltjes integral

[Gardner 1985]. The sine wave in (15) and (16) must be multiplied by

to represent the infinitesimal sinewave components contained in

and

.

7 For a persistent waveform (which does not die out as $|t| \to \infty$) from a time-invariant phenomenon, the property of sinewave components being mutually uncorrelated is deeper than suggested by (22). In particular, the envelopes (from (1)),

and

, of the local sinewave components (cf. Chapter 4, Section E) become uncorrelated in the limit

for all

as explained in Chapter 7, Section C.

8 The limit averaging operation in (23) can be interpreted (via the law of large numbers) as the probabilistic expectation operation.

9 To make the formal manipulation used to obtain (25) rigorous, must be replaced with the envelope of the local sinewave component, which is obtained from (1) with

replaced by

; then the limit,

, must be taken. An in-depth treatment of this topic of spectral correlation is introduced in Chapter 7, Section C, and is the major focus of Part II.

10 The term *stochastic* comes from the Greek for *to aim at* (to guess).

11 The term *ergodic* comes from the Greek for *work path*, which, in the originating field of statistical mechanics, relates to the path, in one dimension, described by $x(t)$, of an energetic particle in a gas.

The Fourier theory of sine wave analysis of functions has its origins in two fields of investigation into the nature of the physical world: acoustical/optical wave phenomena and astronomical and geophysical periodicities.12 These two fields have furnished the primary stimuli from the natural sciences to the classical study of spectral analysis, which extends into the first half of the twentieth century. The motions of the planets, the tides, and irregular recurrences of weather, with their hidden periodicities and disturbed harmonics, form a counterpart of the vibrating string in acoustics and the phenomena of light in optics. Although the concept of sine wave analysis has very early origins, the first bona fide uses of sine wave analysis apparently did not occur until the eighteenth century, with the work of Leonhard Euler (1707–1783) and Joseph Louis Lagrange (1736–1813) in astronomy [Lagrange 1772].13

The concept of statistical spectral analysis germinated in early studies of light, beginning with Isaac Newton's prism experiment in 1664, which led to the notion that white light is simply an additive combination of homogeneous monochromatic vibrations. The developing wave-optics ideas, together with developing ideas from meteorology and astronomy, led Sir Arthur Schuster (1851–1934), around the end of the nineteenth century, to the invention of the periodogram for application to the problem of detecting hidden periodicities in random data [Schuster 1894, 1897, 1898, 1900, 1904, 1906, 1911]. The periodogram, denoted by $S_{x_T}(f)$ (originally defined for discrete-time data), is simply the squared magnitude of the Fourier transform of a finite segment of data, $x_T(t)$, normalized by the length, $T$, of the data segment (graphed versus the frequency variable, $f$):

$$S_{x_T}(f) \triangleq \frac{1}{T}\left|X_T(f)\right|^2, \qquad (31)$$

$$X_T(f) \triangleq \int_{-\infty}^{\infty} x_T(t)\, e^{-i2\pi f t}\, dt, \qquad (32)$$

where $x_T(t)$ is taken to be zero for $|t| > T/2$. If a substantial peak occurred in the periodogram, it was believed that an underlying periodicity of the frequency at which the peak occurred had been detected. As a matter of fact, this idea preceded Schuster in the work of George Gabriel Stokes (1819–1903) [Stokes 1879]; and a related approach to periodicity detection developed for meteorology by Christoph Hendrik Diederik Buys-Ballot (1817–1890) preceded Stokes [Buys-Ballot 1847]. The first general development of the periodogram is attributed to Evgeny Evgenievich Slutsky (1880–1948) [Slutsky 1929, 1934].
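Schuster's use of the periodogram can be illustrated with a minimal sketch in the discrete-time form in which it was originally defined. The data are a sinusoid buried in Gaussian noise, and the periodogram $|\sum_n x[n]e^{-i2\pi fn}|^2/N$ is evaluated at the bin frequencies $k/N$. The following are illustrative assumptions: the signal frequency, the noise level, the segment length, the random seed, and the helper name `periodogram`. A direct (slow) DFT is used so that only the Python standard library is needed.

```python
import cmath
import math
import random

def periodogram(x, freqs):
    """Discrete-time periodogram |sum_n x[n] e^{-i 2 pi f n}|^2 / N at each normalized frequency f."""
    n_len = len(x)
    out = []
    for f in freqs:
        X = sum(xn * cmath.exp(-2j * math.pi * f * n) for n, xn in enumerate(x))
        out.append(abs(X) ** 2 / n_len)
    return out

random.seed(1)
N = 256
f0 = 32 / N  # hypothetical "hidden" periodicity, in cycles per sample
# Unit-amplitude cosine plus unit-variance Gaussian noise: the sinusoid is hard to see in the raw data.
x = [math.cos(2 * math.pi * f0 * n) + random.gauss(0.0, 1.0) for n in range(N)]

freqs = [k / N for k in range(N // 2 + 1)]
S = periodogram(x, freqs)
k_peak = max(range(len(S)), key=S.__getitem__)
print(freqs[k_peak])  # expected: 0.125, the hidden periodicity f0
```

The sinusoid contributes about $N/4 = 64$ to its bin, while the noise contributes values of order 1 to every bin. This margin is what made a "substantial peak" look like evidence of a periodicity.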

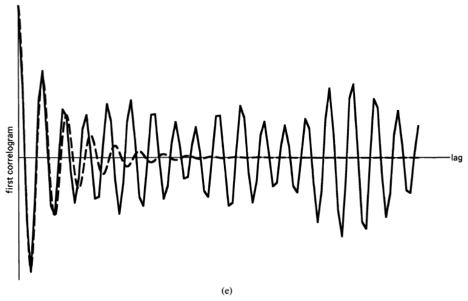

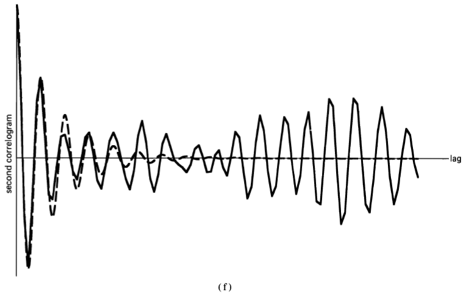

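Another approach to detection of periodicities that was being used in meteorology in the early part of the twentieth century was based on the correlogram [Clayton 1917; Alter 1927; Taylor 1920, 1938], whose earliest known use [Hooker 1901] was motivated by the studies in economics of John Henry Poynting (1852–1914) [Poynting 1884]. The correlogram, denoted by $R_{x_T}(\tau)$ (originally defined for discrete-time data), is simply the time-average of products of time-shifted versions of a finite segment of data (graphed versus the time-difference variable, $\tau$),

$$R_{x_T}(\tau) \triangleq \frac{1}{T}\int_{-\infty}^{\infty} x_T\!\left(t+\frac{\tau}{2}\right) x_T\!\left(t-\frac{\tau}{2}\right) dt. \qquad (33)$$

But since $x_T(t)$ is zero for $t$ outside $[-T/2, T/2]$, we obtain

$$R_{x_T}(\tau) = \begin{cases} \dfrac{1}{T}\displaystyle\int_{-(T-|\tau|)/2}^{(T-|\tau|)/2} x\!\left(t+\frac{\tau}{2}\right) x\!\left(t-\frac{\tau}{2}\right) dt, & |\tau| < T, \\[2ex] 0, & |\tau| \geq T. \end{cases} \qquad (34)$$

If an oscillation with $\tau$ occurred in the correlogram, it was believed that an underlying periodicity had been detected.14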
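The correlogram can be sketched the same way in discrete time. Integer lags cannot be split symmetrically as $\tau/2$, so this sketch uses the one-sided product $x[n+m]\,x[n]$. For real data, a change of the time variable shows that this gives the same values as the symmetric form in (33). The data, seed, lag range, and function name are assumptions. For a sinusoid in noise, the correlogram oscillates at the sinusoid's period, and this oscillation is the signature that was read as a detected periodicity.

```python
import math
import random

def correlogram(x, max_lag):
    """Biased correlogram R[m] = (1/N) * sum_{n=0}^{N-1-m} x[n+m] x[n], for m = 0..max_lag.

    Data outside the segment are treated as zero, so only N - m products survive at lag m.
    """
    n_len = len(x)
    return [sum(x[n + m] * x[n] for n in range(n_len - m)) / n_len for m in range(max_lag + 1)]

random.seed(2)
N = 1024
f0 = 0.125  # hypothetical hidden periodicity: a period of 8 samples
x = [math.cos(2 * math.pi * f0 * n) + random.gauss(0.0, 1.0) for n in range(N)]
R = correlogram(x, 32)

# Away from lag 0 the noise contribution is small, and R[m] follows roughly
# 0.5 * cos(2*pi*f0*m): positive at multiples of 8, negative at odd multiples of 4.
print([round(r, 2) for r in R[:9]])
```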

The discovery of the periodogram-correlogram relation (e.g., [Stumpff 1927; Wiener 1930]) revealed that these two methods for periodicity detection were, in essence, the same. The relation, which is a direct consequence of the convolution theorem (Appendix 1-1 at the end of Chapter 1), is that $S_{x_T}(f)$ and $R_{x_T}(\tau)$ are a Fourier transform pair (exercise 10):

$$S_{x_T}(f) = \int_{-T}^{T} R_{x_T}(\tau)\, e^{-i2\pi f\tau}\, d\tau.$$

This relation was apparently understood and used by some before the turn of the century, as evidenced by the spectroscopy work of Albert Abraham Michelson (1852—1931), who in 1891 used a mechanical harmonic analyzer to compute the Fourier transform of a type of correlogram obtained from an interferometer for the purpose of examining the fine structure of the spectral lines of lightwaves.
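The periodogram-correlogram relation can be checked numerically in discrete time, where it reads $|X(f)|^2/N = \sum_{m=-(N-1)}^{N-1} R[m]\,e^{-i2\pi fm}$. The sketch below computes both sides for a short random real-valued segment at several frequencies. These include frequencies that fall between DFT bins, because the identity holds for every $f$. The segment length, seed, test frequencies, and function names are arbitrary illustrative choices.

```python
import cmath
import math
import random

def periodogram_at(x, f):
    """|sum_n x[n] e^{-i 2 pi f n}|^2 / N at a single normalized frequency f."""
    X = sum(xn * cmath.exp(-2j * math.pi * f * n) for n, xn in enumerate(x))
    return abs(X) ** 2 / len(x)

def correlogram_full(x):
    """Biased correlogram R[m] for m = 0..N-1; for real data R[-m] = R[m]."""
    n_len = len(x)
    return [sum(x[n + m] * x[n] for n in range(n_len - m)) / n_len for m in range(n_len)]

def fourier_of_correlogram(R, f):
    """Sum over lags -(N-1)..(N-1), folded using the even symmetry R[-m] = R[m]."""
    total = R[0]
    for m in range(1, len(R)):
        total += 2 * R[m] * math.cos(2 * math.pi * f * m)
    return total

random.seed(3)
x = [random.gauss(0.0, 1.0) for _ in range(64)]
R = correlogram_full(x)
test_freqs = [0.0, 0.05, 0.123, 0.31, 0.5]
max_diff = max(abs(periodogram_at(x, f) - fourier_of_correlogram(R, f)) for f in test_freqs)
print(max_diff)  # agreement to floating-point round-off
```

Expanding $|X(f)|^2 = \sum_n\sum_k x[n]x[k]e^{-i2\pi f(n-k)}$ and grouping terms by the lag $m = n-k$ gives exactly the right-hand side. The code simply carries out that bookkeeping.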

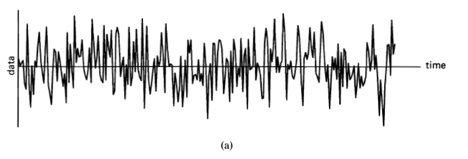

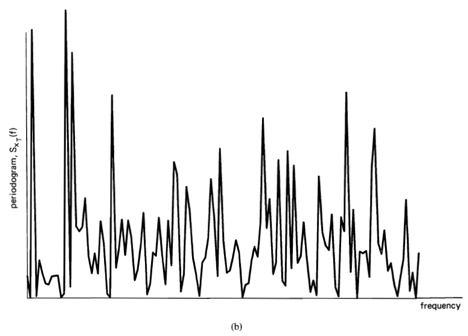

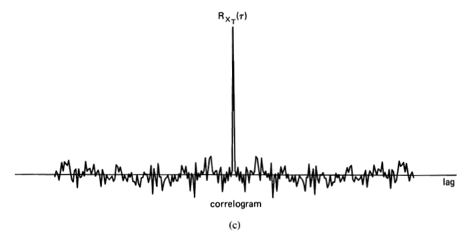

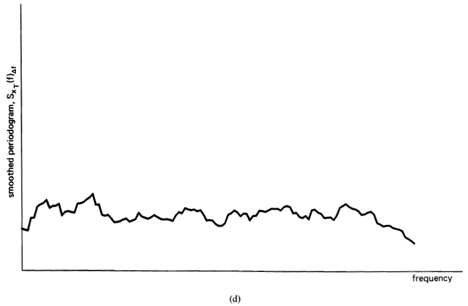

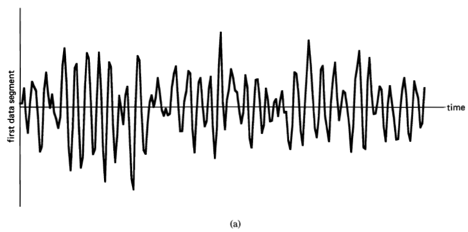

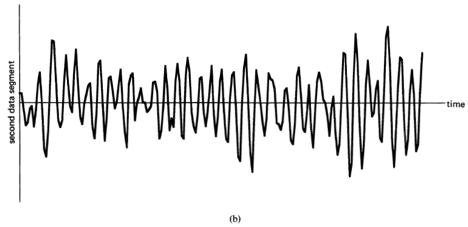

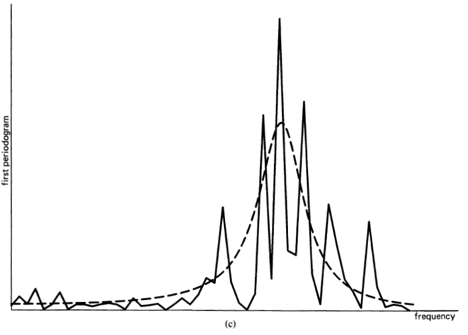

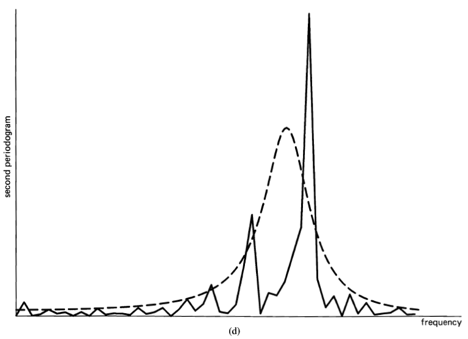

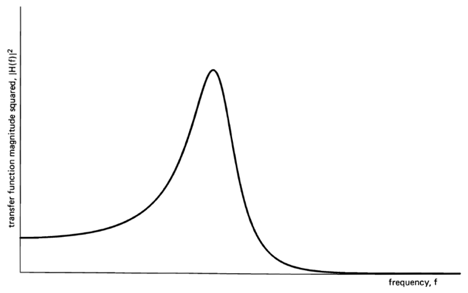

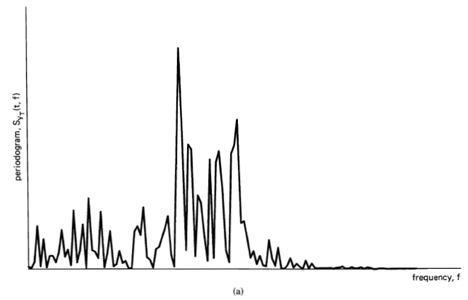

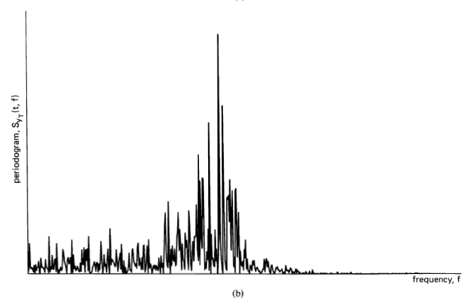

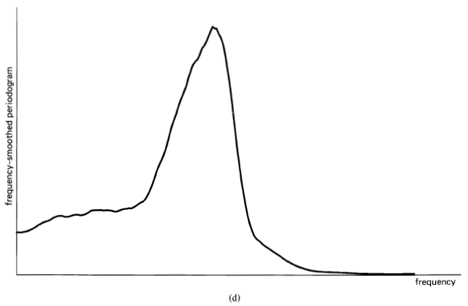

A completely random time series is defined to be one for which the discrete-time correlogram is asymptotically (as $T \to \infty$) zero for all nonzero time-shifts, $\tau \neq 0$, indicating there is no correlation in the time series. A segment of a simulated completely random time series is shown in Figure 1-1(a), and its periodogram and correlogram are shown in Figures 1-1(b) and 1-1(c). This concept arose (originally for discrete-time data) around the turn of the century [Goutereau 1906], and a systematic theory of such completely random time series was developed in the second decade by George Udny Yule (1871–1951) [Yule 1926]. Yule apparently first discovered that an LTI transformation (a convolution) can introduce correlation into a completely random time series. The periodogram-correlogram relation suggests that a completely random time series has a flat periodogram (asymptotically). By analogy with the idea of white light containing equal amounts of all spectral components (in the optical band), a completely random time series came to be called white noise. The correlation-inducing effect of an LTI transformation and the periodogram-correlogram relation together led to a further discovery: a completely random time series, subjected to a narrow-band LTI transformation, can exhibit a periodogram with sharp dominant peaks when in fact there is no underlying periodicity in the data. This is illustrated in Figure 1-2. This revelation, together with several decades of experience with the erratic and unreliable behavior of periodograms (first established as an inherent property by Slutsky [Slutsky 1927]), led during the mid-twentieth century to the development of various averaging or smoothing (statistical) methods for modifying the periodogram to improve its utility. A smoothed version of the periodogram in Figure 1-1(b) is shown in Figure 1-1(d).

Such averaging techniques were apparently first proposed by Albert Einstein (1879–1955) [Einstein 1914] and Norbert Wiener (1894–1964) [Wiener 1930], and later by Percy John Daniell (1889–1946) [Daniell 1946], Maurice Stevenson Bartlett (1910–) [Bartlett 1948, 1950], John Wilder Tukey (1915–) [Tukey 1949], Richard Wesley Hamming (1915–), and Ralph Beebe Blackman (1904–) [Blackman and Tukey 1958]. In addition, these circumstances surrounding the periodogram led to the alternative time-series-modeling approach to spectral analysis, which includes various methods such as the autoregressive-modeling method introduced by Yule [Yule 1927] and developed by Herman O. A. Wold (1908–) [Wold 1938] and others.
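Both phenomena described here can be reproduced in a short sketch. The first is the erratic periodogram of white noise and how smoothing tames it. The second is the spurious peak produced by a narrow-band LTI transformation of white noise. The smoother is a frequency-domain moving average, a Daniell-type smoother and only one of the several averaging methods named above. The narrow-band LTI transformation is taken to be a two-pole recursive filter. The filter and its parameters `r` and `f_r`, the smoothing width, and the seed are illustrative assumptions, not the settings behind Figures 1-1 and 1-2.

```python
import cmath
import math
import random

def periodogram(x):
    """Periodogram at the N/2 + 1 bin frequencies k/N (direct DFT, standard library only)."""
    n_len = len(x)
    return [abs(sum(xn * cmath.exp(-2j * math.pi * k * n / n_len) for n, xn in enumerate(x))) ** 2 / n_len
            for k in range(n_len // 2 + 1)]

def smooth(S, half_width):
    """Daniell-type smoothing: average each value with its neighbors within +/- half_width bins."""
    out = []
    for k in range(len(S)):
        window = S[max(0, k - half_width): k + half_width + 1]
        out.append(sum(window) / len(window))
    return out

def relative_spread(values):
    """Standard deviation divided by mean: a simple measure of how erratic a spectrum estimate is."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return math.sqrt(var) / mean

random.seed(4)
N = 512
white = [random.gauss(0.0, 1.0) for _ in range(N)]
S_raw = periodogram(white)       # fluctuates about 1 with a spread comparable to its mean
S_smooth = smooth(S_raw, 8)      # averaging 17 bins shrinks the spread roughly by 1/sqrt(17)
print(relative_spread(S_raw), relative_spread(S_smooth))

# Narrow-band LTI transformation of the same white noise: a two-pole resonator
# y[n] = 2 r cos(2 pi f_r) y[n-1] - r^2 y[n-2] + w[n], with hypothetical r and f_r.
r, f_r = 0.98, 0.2
y = [0.0, 0.0]
for w in white:
    y.append(2 * r * math.cos(2 * math.pi * f_r) * y[-1] - r * r * y[-2] + w)
S_nb = periodogram(y[2:])
k_peak = max(range(len(S_nb)), key=S_nb.__getitem__)
print(k_peak / N)  # a sharp peak near f_r, although the data contain no periodicity
```

The filtered data contain no sinusoid, yet their periodogram has a dominant peak near `f_r` that looks just like the "hidden periodicity" peak of a sinusoid in noise.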

Apparently independent of and prior to the introduction (by others) of empirical averaging techniques to obtain less random measurements of spectral content of random time-

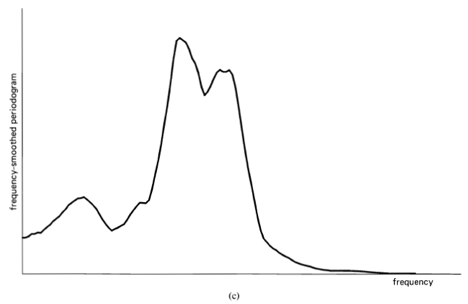

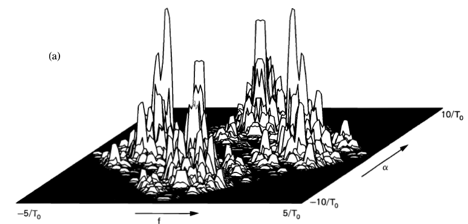

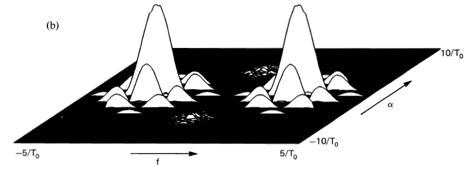

Figure 1-1 (a) Completely random data (white noise), . (b) Periodogram of white noise,

.

Figure 1-1 (continued) (c) Corrrelogram of white noise, . (d) Smoothed periodogram of white noise,

,

.

series, Wiener developed his theory of generalized harmonic analysis [Wiener 1930], in which he introduced a completely nonrandom measure of spectral content. Wiener’s spectrum can be characterized as a limiting form of an averaged periodogram. In terms of this limiting form of periodogram and the corresponding limiting form of correlogram, Wiener developed what might be called a calculus of averages for LTI transformations of time-series. Although it is not well known, 15 Wiener’s limit spectrum and its characterization as the Fourier transform of a limit

Figure 1-2 (a), (b) Two segments of narrow-band data, .

correlogram had been previously presented (in rather terse form) by Einstein [Einstein 1914].